Alchemy doesn’t scale: the economics of general intelligence

Even huge leaps forward in capabilities don’t alter basic reality


Introduction

Over the past few weeks, we’ve been focusing on technical pragmatism. Our belief is that successful entrepreneurs pick the right tools for the job and focus relentlessly on stepwise technical derisking and customer value. Sometimes this means using the shiniest and most expensive technology available, but often it doesn’t. In our pieces on frontier model economics and on hardware alchemy, we argued that a combination of hype, FOMO, and readily available VC-backed spending subsidies was encouraging people to believe that the laws of gravity no longer applied.

One variety of pushback has been that we’re clinging to an outdated paradigm that will be swept aside by the advent of some form of ‘general intelligence’. The argument here is that increasingly powerful frontier models will unlock agents that can quickly learn and adapt to new tasks, combine skills and knowledge in novel ways, and handle the ambiguity and open-endedness of real-world environments, all to superhuman standards and at so low a cost that access will be abundant for all. This is intelligence as the Swiss psychologist Jean Piaget put it: what you use when you don’t know what to do.

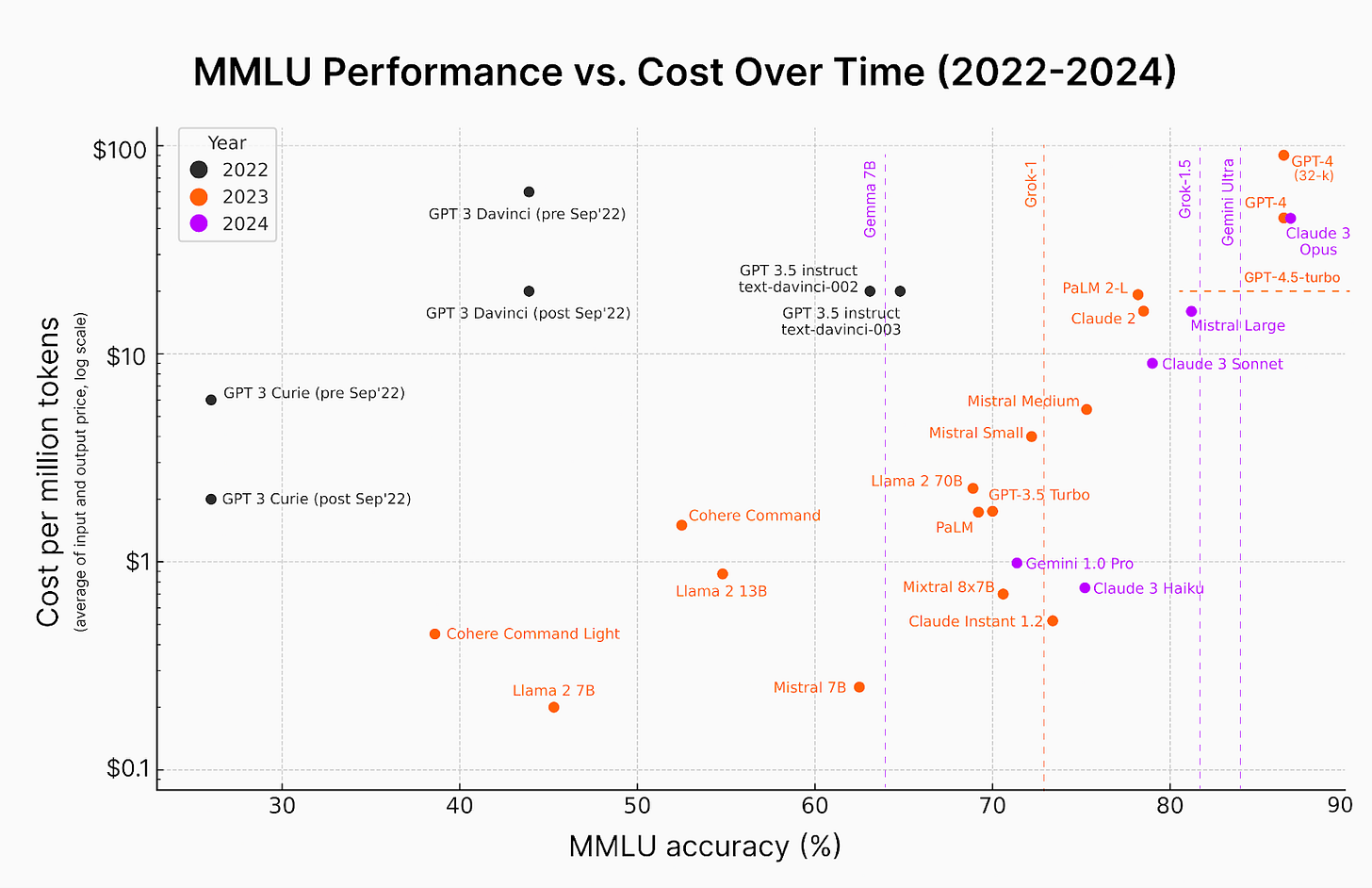

This, the argument goes, will unleash such large economic gains that our prosaic concerns about margins, profitability, and sustainability will fall away. If certain dramatic predictions are correct, we should be able to discern the imminence of all this simply by looking at (highly scientific) extrapolations on graphs.

Regular readers won’t be surprised to see us raise an eyebrow (or both) at these kinds of predictions. Even so, we think it’s worth taking the idea seriously because we do believe that strong general models will likely see further dramatic increases in capability.

So today, we’re asking: if something approaching ‘general intelligence’ did become available, would it invalidate our thesis?

Taking a step back

In some sense, the question of whether we’ll use the most performant general intelligence system for any and every task, regardless of its nature, boils down to a handful of basic, long-standing questions that we constantly ask outside AI. Let’s go through them:

Performance, for a price

Firstly, there’s the trade-off between cost and performance. Were such a high-powered general model to exist, it would likely come with a hefty compute bill, both for building the model and for running it at scale. This would likely make it overpriced and overpowered for many enterprise applications.

This is obvious and intuitive in day-to-day life. Most people, even if they could stretch to it, are unlikely to opt for a Ferrari if they live in a built-up urban area, as it would mean paying for capabilities they would never fully use. In the same way, the average enterprise user of an Apple computer doesn’t buy the highest-spec version to run their many browser tabs, music service, and messaging apps. Few people are motivated to pay $7k for Apple’s famed “cheese grater” Mac Pro, with its 24-core CPU and 60- or 76-core GPU, when they will never use that power.

For many technology products, the expensive, high-spec option is not just overpriced: it can actually deliver worse performance once specific user needs are taken into account. For example, despite a rush of initial excitement, custom drones produced by US start-ups have been largely ineffective in the war in Ukraine compared with their cheap, off-the-shelf Chinese counterparts.

Not only did they fail to live up to their benchmark range and payload figures, but their high-powered designs actively worked against them. US drones’ advanced communications and anti-jamming systems, ironically designed to make them more secure, made them a bigger target for Russian electronic warfare. Meanwhile, the readily available, low-cost components in Chinese DJI drones make them harder to single out, as those components are ubiquitous enough to blend into the surrounding electronic noise.

In our previous piece on hardware, we gave the example of NVIDIA’s failure to conquer the mobile and tablet market with its Tegra system on a chip (SoC). NVIDIA’s traditional strength in high-quality graphics counted for less once power was capped by a battery. Its competitors could match it on quality while offering lower prices and longer battery life. NVIDIA eventually exited the market.

Researchers already navigate these trade-offs on a regular basis when working with LLMs…

General vs. specific purpose

The second trade-off is between a general-purpose tool and a specialized one. We already confront this directly in AI, without needing analogies from other sectors.

This is where impressive benchmark performance by general systems can give a misleading impression of real-world applicability.

If we take medicine as an example, Microsoft published a paper last year evaluating GPT-4’s capabilities on medical competency exams and benchmark datasets. It concluded that, even without specialist prompt crafting, GPT-4 outperformed models fine-tuned on medical knowledge, such as Google’s Med-PaLM. Med-PaLM 2 only narrowly edged out GPT-4 on MultiMedQA.

But this misses the point. No one doubts that a significant amount of medical knowledge is encoded in powerful general-purpose models, but this is not the same thing as real-world robustness. Medical exams are a proxy for a doctor’s skills, but only a proxy: doctors are tested on many other skills day to day during their residency. Doing well on a proxy does not automatically mean doing well on whatever the proxy stands in for. It would be like saying that proficiency at LeetCode makes someone a good software engineer.

This is why the Med-PaLM team took further steps, including fine-tuning, detailed human evaluation, adversarial testing, and a novel prompting strategy. It’s unlikely that even a highly powerful general model could skip all of these steps and still pass the regulatory approvals needed for deployment in a clinical setting. And fine-tuning such a hypothetical system would likely be an enormous challenge, both because of its complexity and because of the significant computational resources required.

We’re also yet to see very powerful general purpose models demonstrate high levels of performance in tasks that require real-world interaction and adaptation (e.g. in robotics). Even models with very high parameter counts struggle to adapt to new, unforeseen situations in real-time and to coordinate responses across multiple modalities.

While foundation models excel at memorization, manipulating physical objects requires a deep grasp of concepts like causality, physics, and object permanence. This is why even powerful models, such as GPT-4, struggle as world simulators once you move beyond common-sense tasks.

As a result, companies working on high-stakes, embodied AI challenges build their own specialist models, combining multiple modalities and domain-specific data.

Reality check

There is, of course, the obvious pushback that future models will be so powerful that these trade-offs won’t matter.

But this is where we think general intelligence speculation can veer off into the realm of fantasy. Many of these predictions lean on model scaling laws and treat the physical world as an afterthought.

They assume a near-term utopia where development costs are low, inference is cheap, hardware bottlenecks cease to exist, pricing is low, and capabilities are extremely high. The capacity of such systems is also assumed to be infinite, so there is no downside to one person using them for a simple task while others simultaneously need them for something more complex. None of this can be inferred from lines going up on graphs. The chances of any two of these assumptions being true are small; the chances of all of them, vanishingly so.

We believe that general-purpose foundation models will gain significantly in capability and disrupt a range of industries, but we ultimately still live on planet Earth, where physical constraints apply.

When it comes to hardware, NVIDIA is in a constant struggle to keep up with demand, as its manufacturer, TSMC, is limited by its chip-on-wafer-on-substrate (CoWoS) packaging capacity. On top of that, this manufacturing is concentrated in one of the world’s most fraught geopolitical hotspots, with the threat of war or blockade looming constantly.

While the US and others are trying to build up their domestic manufacturing capabilities, these efforts face obstacles ranging from missed deadlines to permitting and regulatory hurdles. The first two TSMC facilities in Arizona, subsidized under the US CHIPS Act, will produce 600,000 wafers per year, versus the company’s current capacity of 16 million. This is not to say they aren’t worthwhile projects, just that huge volumes of spare capacity aren’t about to come online in the US.
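To make the gap concrete, the two wafer figures in the paragraph above can be compared directly. This is a minimal sketch that uses only the source’s numbers (600,000 wafers per year for the first two Arizona fabs, 16 million for TSMC’s current capacity); the constant and function names are hypothetical, chosen for illustration.

```python
# Figures quoted in the essay, in wafers per year.
ARIZONA_FIRST_TWO_FABS = 600_000
TSMC_CURRENT_CAPACITY = 16_000_000


def capacity_share(new_capacity: int, existing_capacity: int) -> float:
    """Return new capacity as a fraction of existing capacity (hypothetical helper)."""
    return new_capacity / existing_capacity


share = capacity_share(ARIZONA_FIRST_TWO_FABS, TSMC_CURRENT_CAPACITY)
print(f"{share:.2%}")  # prints "3.75%"
```

In other words, on these figures the two subsidized fabs add under 4% of the company’s existing output.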

Maybe Sam Altman’s $7T chip empire will come into being, but again, on what sort of timeline? And it’s hard to see how such an endeavor could be funded without the deep pockets of sovereign wealth funds, whose involvement the US may find uncomfortable for obvious reasons.

Then there’s the question of data center infrastructure. Mark Zuckerberg has already talked about exponential growth curves potentially requiring data centers of up to 1 GW (versus 50-100 MW at the moment), close to the output of a meaningful nuclear power plant. While this is technologically possible, the scale poses a challenge. Even before considering the regulatory hurdles, builders of standard-sized data centers are seeing years-long waits for back-up generators and cooling systems and are struggling to find affordable real estate near the grid, while even basic components like cables and transformers can take months to source. That’s not to mention the strain on electricity grids, with governments around the world fearing for both supply and their climate targets.
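For a sense of the jump involved, the multiple implied by the figures in the paragraph above can be worked out directly. This is a minimal sketch using only the source’s numbers (1 GW proposed versus 50-100 MW today); the names are hypothetical, chosen for illustration.

```python
# Figures quoted in the essay, in megawatts.
PROPOSED_SITE_MW = 1_000  # 1 GW
CURRENT_SITE_MW_RANGE = (50, 100)


def scale_up_range(target_mw: float, current_range: tuple[float, float]) -> tuple[float, float]:
    """Return (smallest, largest) multiple of today's sites needed to reach target_mw."""
    low, high = current_range
    # Dividing by the larger current size gives the smaller multiple, and vice versa.
    return target_mw / high, target_mw / low


print(scale_up_range(PROPOSED_SITE_MW, CURRENT_SITE_MW_RANGE))  # prints (10.0, 20.0)
```

On these figures, a single 1 GW site would be 10 to 20 times the size of a typical data center today.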

It’s possible to argue that at some unspecified future point, governments will grasp the significance of imminent AGI, take control, and urgently sweep all of these obstacles aside. Aschenbrenner’s Situational Awareness imagines this kind of government takeover of the sector. But as reassuring as this might be to some in the tech world, there are no government ninjas behind the curtain with a secret AGI plan.

State capacity is low, money is scarce, and expertise is lacking. AGI is also not comparable to past efforts where government and science have joined forces to great effect, like the Manhattan Project. There’s no agreement on what the aim is, the entire economy isn’t being commandeered towards a single purpose (i.e. winning a war), and we’re not currently living through the emergency suspension of large chunks of law and basic civil rights.

Were governments to step in to try to solve these problems, even with the best will in the world, it would take years. Even in China, where processes like construction are heavily streamlined, it can take 3-5 years to build a semiconductor fab and 6-8 years to build a nuclear power plant. These challenges can’t be resolved overnight. A US government taskforce, even with a huge budget, cannot simultaneously resolve shortages of chips, components, labor, land, and energy. Be real.

Closing thoughts

This piece marks the third part of an unplanned trilogy on the importance of technical pragmatism. Our intention with this and our pieces on frontier models and hardware is not to pour cold water on progress in the field. Watching the talks at this year’s RAAIS summit (they’ll live on our YouTube channel), we were struck by the sheer diversity of challenges founders are now taking on. We also saw the integral role foundation models now play in the tech stacks of businesses working on everything from self-driving and video generation to deciphering human biology.

But the thing all our speakers shared was a deep pragmatism. They flexed their approaches as the field evolved, they picked the right tool for the job, and they didn’t bet on trends. They were driven by science and pragmatism, not vibes.

Even the founders of very highly capitalized businesses don’t operate on the basis that cheap money will remain cheap and that the laws of physics will be suspended forever. VC money and sovereign wealth aren’t going to subsidize compute indefinitely, especially as both the free market and research open up alternative approaches.

Speculation about the future development of foundation models and watching the line go up on the graph is all very interesting. But fanfiction isn’t helpful for entrepreneurs, investors, governments, or anyone else approaching this space. It’s no substitute for a cool-headed, reasoned look at the state of research, at how a solution integrates into a workflow, or at a real-life customer trying to solve a real-life problem. Ultimately, this is what the builders grasp and the theoreticians miss. This is our approach to investing and company building at Air Street.