91% of AI papers used NVIDIA in 2024

Final 2024 statistics for AI chip usage across NVIDIA, Google, Huawei, Apple, AMD, Cerebras, Cambricon, Graphcore, Groq, SambaNova, and Intel Habana in the State of AI Report Compute Index.

Introduction

For the 2022 edition of the State of AI Report, we launched the Compute Index with our data partners Zeta Alpha. Its goal is to track the size of the largest GPU computing systems across public and private clouds, as well as national resources. We also track the usage (and emergence) of specific AI accelerators in published AI research papers. This is a street-level view of which chips are the most or least popular, as voted by AI researchers in their own work. It helps inform the industry’s higher-level view of which chip companies are winning or losing (and by what margin).

So, back in 2022, here’s what the picture looked like…

…an NVIDIA washout.

And this washout held for several years, in fact. It’s acutely felt by investors who made $6B worth of bets on AI chip competitors between 2016 and 2024. As of 9 October 2024, their money sat at a mark-to-market value of $31B. Had they invested the $6B into NVIDIA instead, they’d be sitting on $120B of value.
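To make the gap concrete, here is a minimal sketch that turns the three dollar figures quoted above into return multiples. The variable and function names are illustrative, and the calculation ignores timing, dilution and fees, so treat it as back-of-the-envelope arithmetic rather than a proper returns analysis.

```python
# Figures quoted in the text, in billions of US dollars.
invested = 6.0             # total bet on AI chip competitors, 2016 to 2024
challengers_value = 31.0   # mark-to-market value as of 9 October 2024
nvidia_value = 120.0       # value had the same $6B gone into NVIDIA instead


def multiple(value, cost):
    """Return the current value as a multiple of the money invested."""
    return value / cost


challengers_multiple = multiple(challengers_value, invested)
nvidia_multiple = multiple(nvidia_value, invested)
gap = nvidia_value - challengers_value

print(f"Challengers: {challengers_multiple:.1f}x")
print(f"NVIDIA:      {nvidia_multiple:.1f}x")
print(f"Difference:  ${gap:.0f}B")
```

On these numbers the challenger bets returned roughly 5x, against 20x for the NVIDIA counterfactual.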

Today’s picture

As we close the books on our data from 2024, the overall scenery looks the same, but we see some definition emerging in the backdrop.

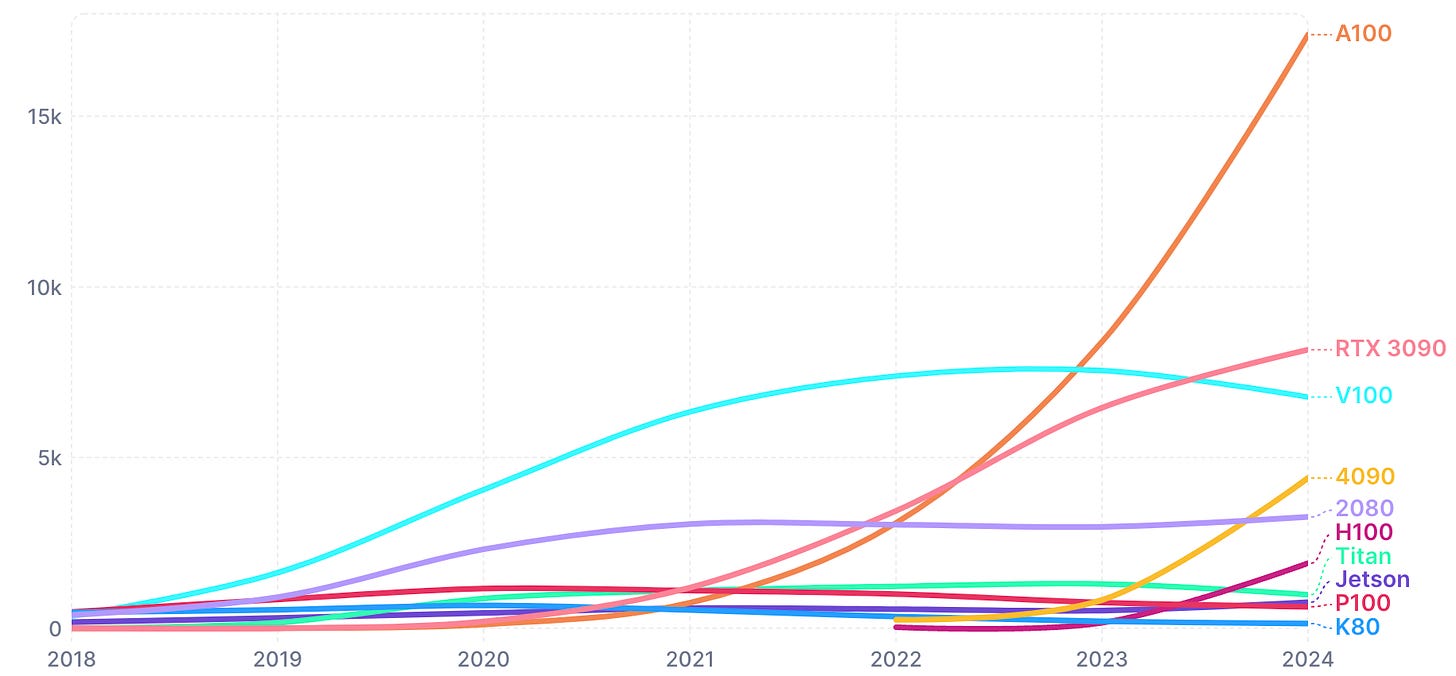

In the chart below, we plot the number of AI research papers per year that make use of chips from specific vendors. For NVIDIA, this includes the RTX 2080, RTX 3090, RTX 4090, K80, P100, V100, A100, H100, Titan, and Jetson chips. The big 6 startups are Habana, Graphcore, Cerebras, SambaNova, Cambricon, and Groq. AMD chips include the MI250, MI300, and MI300X.

NVIDIA comes in at 44,389 papers published in 2024, Google TPUs at 1,702 papers, Apple at 604 papers, the big 6 startups at 586 papers, and AMD at 264 papers.

Clearly, we’re still living in an NVIDIA show: the company’s chips capture 91% of all AI research paper chip usage.
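As a rough illustration of how a usage share like this is derived, the sketch below divides each vendor's 2024 paper count by the total. It uses only the five categories itemized above, which is an assumption: the text does not state the denominator behind the 91% figure. Restricted to these five, NVIDIA's share comes out somewhat higher than 91%, so the headline number presumably counts additional chips that are not itemized here.

```python
# 2024 paper counts as quoted in the text.
# Assumption: only these five categories are included in the denominator.
papers_2024 = {
    "NVIDIA": 44_389,
    "Google TPU": 1_702,
    "Apple": 604,
    "Big 6 startups": 586,
    "AMD": 264,
}


def shares(counts):
    """Return each vendor's fraction of the total paper count."""
    total = sum(counts.values())
    return {vendor: n / total for vendor, n in counts.items()}


vendor_shares = shares(papers_2024)

# Print vendors from largest to smallest share.
for vendor, share in sorted(vendor_shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{vendor:15s} {share:6.1%}")
```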

If we double click on NVIDIA’s chip lineup usage, we see that the A100 is still the most popular chip. The H100 is seeing rapid growth, while older cards such as the V100 hold their own.

Any contenders from big tech?

While the above picture is certainly stark, here’s what the YoY growth trends look like for specific chips. Of note, Google’s TPU usage has grown almost 1,000% YoY to account for 1,702 papers in 2024. Next up, Huawei, AMD and Apple are racking up usage, altogether totalling 955 papers. A ways to go.

Cerebras maintains its lead and Groq overtakes Graphcore

Over the summer, the wafer-scale compute system company Cerebras launched its inference service and filed to IPO. Its chips have seen continued growth in AI research papers in 2024, earning the company the number 1 spot among the AI chip startups for the second year in a row.

Meanwhile, Groq has come out of nowhere to take the number 2 spot. The company, founded by members of the original Google TPU team, is also competing in the (very crowded) inference market. Usage of products from Graphcore, which was sold to SoftBank Group in the fall, is levelling out.

Closing thoughts

The NVIDIA show continues, and it will likely keep running for a long time…