Embodied AI is hitting its stride

A deep dive into world models, VLAMs, planning layers and real deployments from robotics companies Sereact and Wayve - and what comes next for embodied AI.

12 months on

Last year, in our Embodied AI outtake from the State of AI Report, we argued that robotics had moved from being the “unloved cousin” of AI to an area undergoing a genuine renaissance driven by progress in foundation models. Since then, the field has advanced rapidly. On the research front, what was previously a collection of isolated systems is now beginning to reveal clearer architectural patterns from groups like AI2, Google DeepMind and NVIDIA, and on the commercial side, we’re seeing large-scale deployments of Embodied AI systems by robotics companies like Sereact and Wayve.

This essay revisits and updates our previous analysis with substantive progress in the field and potential future directions.

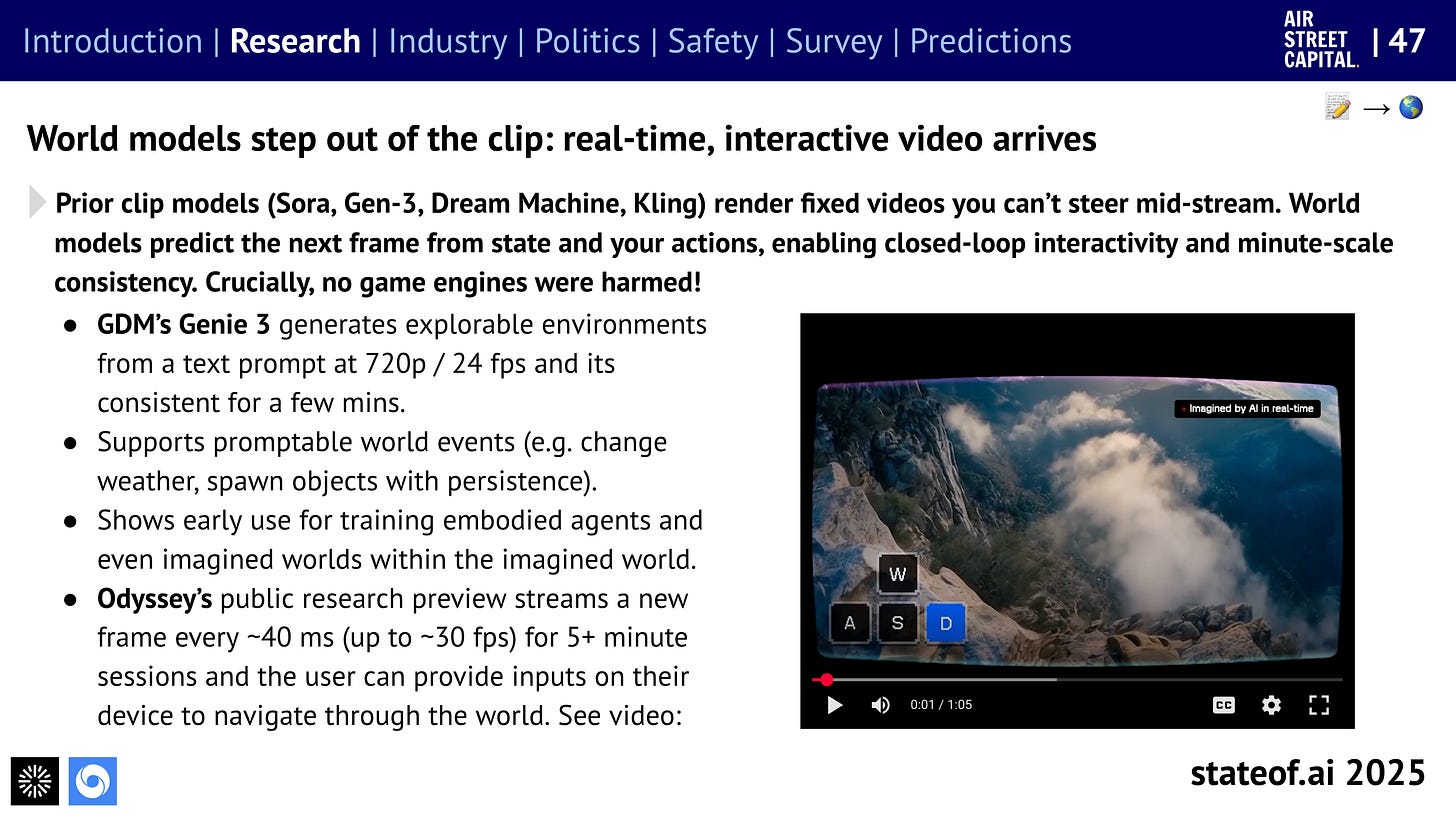

World models offer richer virtual playgrounds

In the last year, we’ve seen the release of Odyssey 2, Dreamer v4 and Genie-3, which all push beyond passive video prediction into richer, interactive environments. These models now support agent-conditioned rollouts, long-horizon temporal structure and even 3D persistence - capabilities that were unthinkable in early generative work. While these systems are yet to demonstrate sim-to-real transfer for robotics, this feels like their likely direction of travel.

This month, DeepMind’s SIMA-2 offered a clear glimpse of where this might go on the virtual side. In this system, a Gemini-powered agent plays 3D games, explains its plans and adapts to procedurally generated worlds created by models like Genie-3. Relatedly on the driving side, Wayve’s GAIA-2 uses a generative world model to roll out closed-loop driving trajectories in novel scenes, showing that similar ideas can be applied to real-world sensorimotor data.

Taken together, these systems suggest that world models are morphing into a high-potential training and evaluation substrate. Future embodied policies can be stress-tested cheaply, safely and at scale before being exposed to the real world.

Vision-Language-Action Models (VLAMs)

VLAMs have become the clearest expression of how the foundation‑model playbook is infusing new life into robotics. Instead of custom perception stacks and task‑specific controllers, robots are increasingly powered by large multimodal models that can interpret scenes, understand instructions and produce structured actions. This is a big deal.

Two architectural strategies currently dominate the research landscape:

Insulated approaches, where the VLM backbone is kept largely fixed and robot‑specific learning happens in compact action experts trained on modest datasets (e.g. models like π₀.₅, GR00T N1, and to some extent SmolVLA). This offers stability, preserves pretrained knowledge and reduces the risk of catastrophic forgetting.

End‑to‑end approaches, where perception, semantics and control are trained jointly (e.g. models like GR‑3, Gemini Robotics, RT-2). This unlocks richer grounding and enables models to internalise geometry, affordances and contact dynamics.

Despite their differences, these systems share a common thread: they consistently outperform older modular pipelines on generalisation, long‑horizon tasks and cross‑embodiment transfer. π₀.₅ executes multi‑step manipulation in entirely new homes. Gemini Robotics adapts a frontier foundation model to new robot bodies with limited additional data. GR00T N1 brings generalist humanoid control into the open‑weight ecosystem.

Taken together, they mark a meaningful concentration of progress toward large‑scale multimodal models that learn from broad data, reason over abstract goals and synthesize them into precise physical actions.

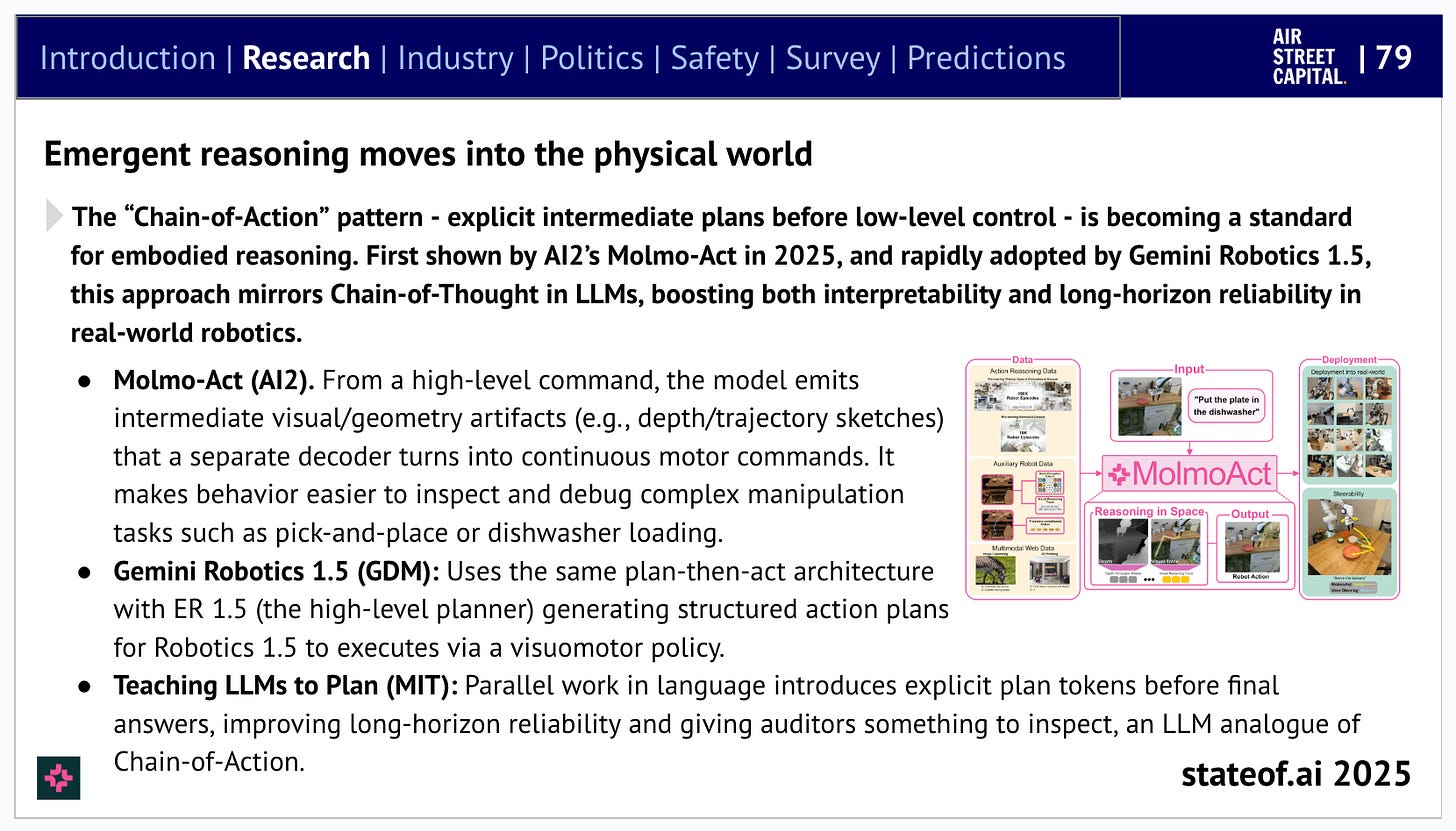

The rise of Chain-of-Action

A subtle but important shift is the move toward explicit planning layers. Instead of jumping straight from pixels to torques, newer systems produce a mid-level sequence of goal tokens, spatial anchors or trajectory hints - a kind of Chain-of-Action inspired by Chain-of-Thought in language models. This emerging pattern marks a notable departure from the traditional “end-to-end or bust” mentality that dominated early deep robotics.

These intermediate representations give embodied systems space to deliberate. They provide structure for breaking complex tasks into achievable steps, allowing models to reason about order, constraints and dependencies in a way that raw motor outputs simply cannot.

More importantly, explicit planning layers begin to answer one of the hardest questions in robotics: how to build systems that are both general and controllable. Planning tokens expose the model’s internal reasoning process, offering developers a handle on why a robot is doing something, not just what it is doing. This transparency is critical in environments where safety, verification and predictability matter.

Another advantage of using Chain-of-Action is that it creates a natural interface for cross-embodiment transfer. When a model outputs a symbolic plan followed by embodiment-specific execution, you can swap the robot without retraining the reasoning component. It is the same logic that allows large language models to generalise across tasks: abstract first, specialise later.

AI2’s Molmo-Act, Google DeepMind’s Gemini-ER and Sereact’s Cortex illustrate this trajectory in different ways. Molmo-Act uses language-conditioned reasoning steps to break down manipulation tasks. Gemini-ER enriches spatial and geometric understanding before delegating control. Cortex uses plan tokens to bridge a VLM-based perception stack with a high-frequency Motion Policy Expert.

Planning layers are still an emerging design choice, but they are exciting because they unlock something robotics has historically lacked: a modular, interpretable and reusable interface between understanding and action.

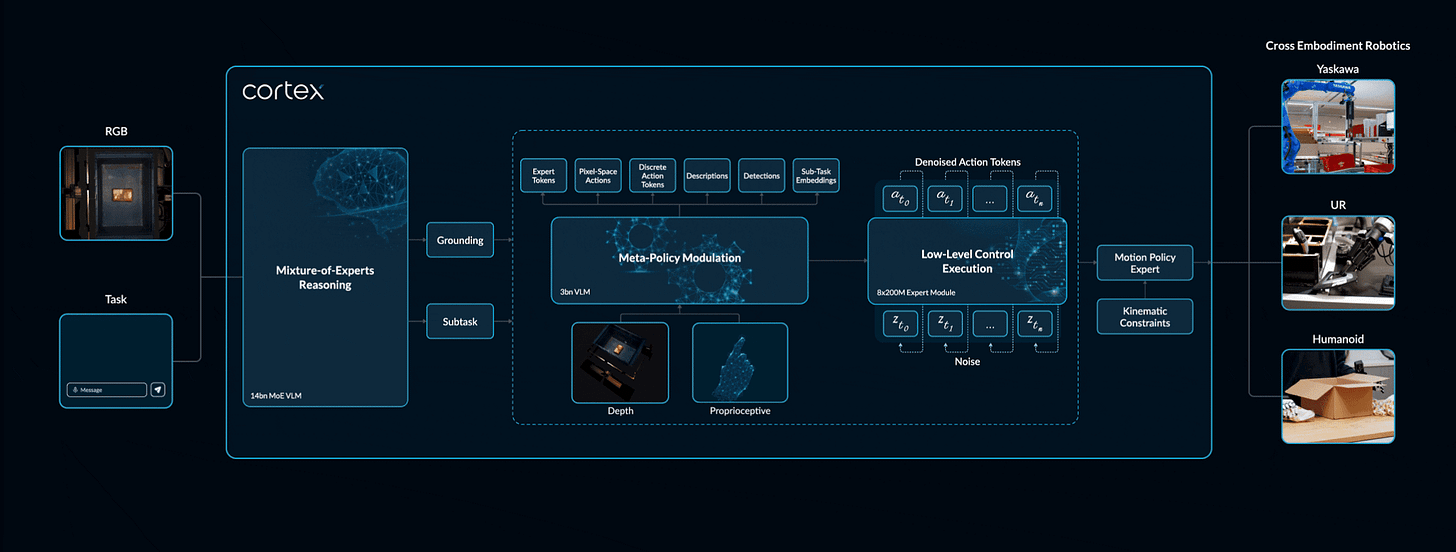

Sereact Cortex: real-world embodied AI in warehouses

Sereact is a Stuttgart/Boston‑based robotics company building embodied AI systems to automate warehouse picking. Its Cortex platform is an example of the modern embodied‑AI stack in action: a unified perception-planning-control system designed to operate under real‑world variability.

Sereact is deploying Cortex across Rohlik Group’s Knuspr and Gurkerl operations - part of a European e‑grocery leader serving more than one million customers and exceeding €1 billion in annual revenue. The rollout starts with 24 robots in Berlin and Vienna and expands toward 100+ systems across DACH. These robots run inside Rohlik’s Veloq fulfilment platform, working reliably in both chilled and ambient zones where traditional automation typically fails. Every action of every robot across the diverse fleet is captured and used as real-world training data to rapidly make Cortex smarter and smarter in a way that simulation and teleoperations cannot.

Cortex works by tokenising both perception and action. A dedicated VLM (“Lens”) interprets scenes and affordances; a planning layer converts these into discrete plan tokens describing the next sub‑steps of the task; and a Motion Policy Expert executes them as smooth, robot‑specific trajectories. This separation of understanding, planning and execution enables the same model family to generalise across arms, grippers and warehouse layouts while remaining debuggable in production.

At a technical level, Cortex compresses multi‑view RGB‑D inputs and deployment logs into discrete video tokens, and discretises short motion primitives into action tokens. The core model consumes text, image, video and action tokens in a single sequence, pretrained on mixed datasets and then finetuned with a continuous‑control expert using trajectory‑matching. A lightweight safety layer enforces limits and intervenes when uncertainty rises.

Wayve: Generalising public road autonomy

Outside of warehouse environments, London/SF-based Wayve is demonstrating what embodied AI generalisation looks like on public roads. Here, a single end-to-end driving model operated in 90 cities in 90 days, across Europe, North America and Asia - with no HD maps, no geofencing and no city-specific tuning. Importantly, 62% of the cities were entirely unseen and 14% of cities had zero prior data. This system logged more than 10,000 hours of AI driving.

Conditions spanned Tokyo’s narrow streets, Alpine terrains, dense European capitals, night driving, heavy rain and complex road geometry. The now-widely shared Tokyo sequence under typhoon conditions captures this vividly.

Wayve’s driving system offers some of the best empirical evidence to date that a single embodied model can handle diverse environments without retraining. No simulation benchmark comes close in terms of real-world diversity.

Humanoids are showing rapid progress

Humanoid robots have surged in investment, capability and architectural sophistication. NVIDIA’s GR00T N1 shows that modern multimodal representations and learned control stacks can operate increasingly complex bodies. Recent home robot prototype launches reinforce this momentum. Sunday Robotics debuted a home-focused humanoid designed for repeatable manipulation tasks, positioning itself explicitly as a pragmatic, deployment-driven alternative to more speculative projects. Meanwhile, 1X introduced its NEO platform with striking demos, though independent analysis indicates that much of the behaviour was teleoperated rather than autonomous, highlighting how uneven real capability remains across the category.

Despite this activity, there is still no equivalent of Wayve’s 90-city tour or Cortex’s multi‑site warehouse deployments in the humanoid space. Hardware reliability, safety certification and fleet‑scale data remain major constraints. As a result, the domain closely resembles autonomous driving circa 2017 - an ambitious technological trajectory, but a long road to safe, scaled deployment.

Into the next 12 months

Embodied AI is entering a phase where the question shifts from “can these systems work?” to “can they scale in real-world deployments?” The core ingredients are now in place: world models that provide increasingly realistic training grounds, VLAMs that unify perception and control under shared representations, and planning layers that expose a robot’s reasoning in a way that is inspectable and steerable. With Cortex operating in real warehouses and Wayve demonstrating continent‑scale generalisation on public roads, the gap between research prototypes and deployed systems is beginning to narrow.

Wayve is probably your best investment yet has a VC.

Excellent synthesis of where the field stands. The Chain-of-Action framing is probly the most underrated development here, basically solving the interpretability problem that's plagued end-to-end robotics for years. Having those intermediate plan tokens means you can actully debug why a system fails instead of just watching it fail mysteriously. The Wayve 90-city deployement with 62% unseen cities is wild evidence for generaliztion. Reminds me how much real-world variance matters compared to sim benchmarks.