Written by Max Cutler, independent writer, and edited by Nathan Benaich.

Breathless headlines around the geopolitical implications of AI dominate our newsfeeds: “AI Arms Race”, “AI War Heats Up”, “AI Doomers Versus AI Accelerationists Locked In Battle For Future Of Humanity”. But where’s the European Union in all of this?

We’ll give it to you straight: it’s quite likely Europe's ambitious regulatory initiatives will be obsolete before they're even fully enacted.

The European Union appears to be operating on an entirely different timeline, one where AI's risks warrant methodical, unhurried deliberation rather than focused enablement.

The world moves on while the EU (waits to) AI Act

The groundwork for the EU AI Act was laid over four years ago when the European Commission published a proposal for "harmonised rules on artificial intelligence" in April 2021. It was then made law in August 2024.

In the technological equivalent of several geological eras, OpenAI has since shipped 14 distinct versions of ChatGPT. GPT-4, which thrust generative AI into the mainstream, wasn't even released until March 2023, almost two years after the proposal for legislation was published.

During this regulatory gestation period, AI startups have raised over $300 billion globally, while a basket of five prominent AI companies has added $4.5 trillion in enterprise value.

Meanwhile, Western narratives around AI safety have undergone a spectacular pivot. Once dominated by philosophers and futurists warning of superintelligent machines that might view humanity as dispensable (think Skynet), today's conversation has, almost overnight, become simultaneously accelerationist and defanged.

The EU is crafting regulation at a snail’s pace, seemingly oblivious to the technological renaissance erupting around it. While the capabilities of models and applications are evolving exponentially, bureaucrats appear focused on trying to grasp an ever-fleeting ‘just past’.

Put simply, the Act was born in a world before GPT-4, and will take effect in a world shaped by GPT-7. Let’s consider a live example. Matthias Fuchs is the founder of Tiger Cow Studios, a Berlin-based AI automation agency working with clients across German industry. He describes how regulatory uncertainty is blocking new development:

"Roughly 60% of the AI prototypes we’ve built for German Mittelstand firms have never left the lab. Potential clients err on the side of caution and completely ban LLMs like ChatGPT, due to uncertainties around EU AI Act compliance. When rules and regulations stop experiments in their infancy, firms miss out on learning loops and see their competitive edges wither."

The fundamental question has become unavoidable: is the EU AI Act a masterclass in regulatory obsolescence—carefully mapping a territory that will have transformed beyond recognition before the cartography is complete?

Let’s dive in.

The EU AI Act: A beautiful architecture but is it buildable?

The Act introduces a sophisticated, four-tier risk classification system:

Prohibited AI Practices: Systems deemed unacceptably harmful are banned outright, including social scoring systems and most real-time biometric identification in public spaces

High-Risk AI Systems: Applications in sensitive domains like healthcare face extensive requirements

Limited Risk AI Systems: Systems with moderate transparency concerns must meet disclosure requirements

Minimal Risk AI Systems: All other AI applications remain largely unregulated

This meticulous classification is overseen by a multi-level governance Frankenstein that would make Mary Shelley proud. At the European supranational level, the AI Office serves as the central coordinator while the European AI Board is meant to ensure harmonized application.

A scientific panel of independent experts hovers nearby, tasked with providing technical opinions on model risks and capabilities; presumably when they're not engaged in academic turf wars.

Rounding out this structure is an advisory forum where industry representatives, civil society organizations, and standards bodies can voice concerns that will likely be meticulously documented and then elegantly filed away.

Complicated enough? We feel you. So here’s a chart that illustrates the same:

Meanwhile, each member state (read: EU country) must establish its own implementation machinery, including market surveillance authorities and regulatory sandboxes. Here’s how this is supposed to work:

National competent authorities oversee market surveillance while notifying authorities evaluate conformity assessment bodies that, in turn, certify high-risk systems for compliance and user safety. These are complemented by a constellation of AI regulatory sandboxes where innovation supposedly thrives under controlled conditions.

Perhaps the most revealing aspect of the Act is the implementation timeline. While the EU AI Act formally entered into force in August 2024, full compliance for public authorities' high-risk systems isn't required until August 2030 – six years later, and more than nine years after the initial proposal. In technological terms, this is equivalent to regulating today's smartphones based on BlackBerry design principles.

Dr. Neo Christopher Chung, a Warsaw-based AI researcher and the founder of Obz AI by Alethia, reveals there is much to be read between the lines of the Act:

"Think tanks and research institutes that do AI security, safety, and governance are writing 'code of practice' documents based on the EU AI Act. This is the devil in the details of European policy making. The EU creates the high-level framework and then outsources its implementation to advisory organizations (think European twists on Brookings) who shape how the guidelines are applied in practice. For now, most people are waiting and dragging their feet because no one has told them what to do and how to comply."

Almost one year after the Act’s ratification, and four years after the proposal for legislation was first published, probably less than 5% of what will ultimately be implemented exists, if that.

At the same time, the Commission has established ambitious benchmarks: 75% of EU enterprises adopting AI by 2030, doubling AI startups to 12,000, and reducing high-risk AI incidents by 65%. Yet, one wonders if these targets measure bureaucratic self-perpetuation rather than meaningful innovation.

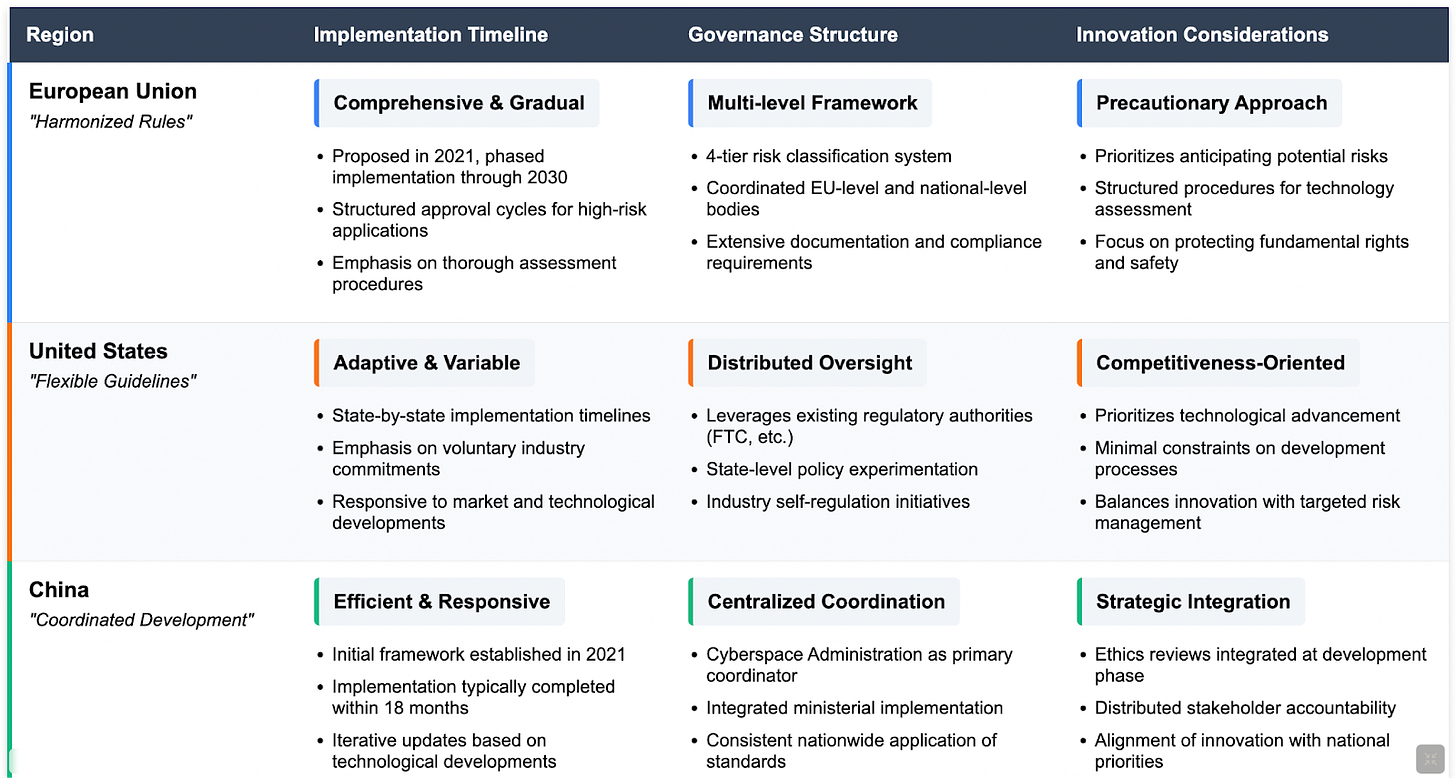

Maybe, though, we’re being too tough and the EU’s approach is just a reflection of what is simply realistic. Let’s look at other systems in an attempt to put Europe’s regulatory framework in context.

Competing Approaches: America and China are both playing to win, albeit with quite different systems

Under Trump's second administration, the regulatory focus is squarely on competitiveness with China rather than on safety guardrails, as in Europe. Federal initiatives favor voluntary guidelines over comprehensive legislation, while states like Colorado and California have established their own "regulatory laboratories" in an attempt to shape a future national standard.

The US lacks a centralized governance structure ‘built for purpose’, operating instead through existing authorities like the Federal Trade Commission (FTC). This deliberate move reflects America's competitive ethos but risks dangerous gaps in oversight.

For now, it’s probably prudent to temper any rigorous analysis of the American regulatory landscape. In July of this year, “AI Czar” David Sacks and his team are expected to publish an ‘Artificial Intelligence Action Plan’ that will set out the regulatory objectives of the second Trump presidency.

China, meanwhile, has been implementing AI regulation since 2021, with rapid developments in the past 18 months. Its regulatory system assigns differentiated obligations across the AI ecosystem – from service providers to users – creating a shared responsibility model. Recent standards provide concrete technical requirements for security incident response, content filtering, and bias prevention.

Most notably, China's approach integrates ethics reviews at the development stage rather than focusing solely on deployed technologies. The Cyberspace Administration coordinates with six other ministries, creating a comprehensive regulatory ecosystem that balances innovation with state interests.

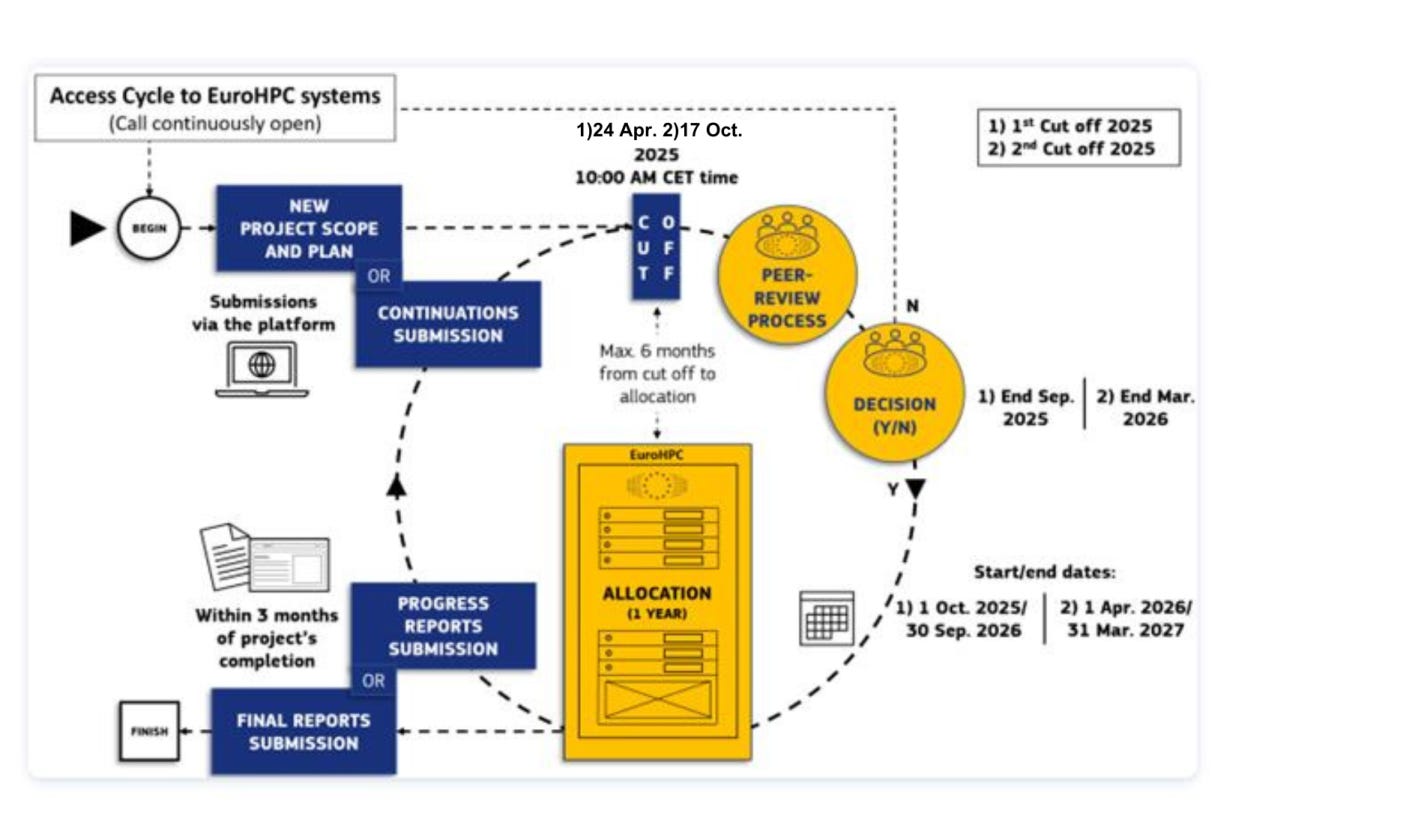

Case Study: EuroHPC's Bureaucratic Bottleneck

No example better illustrates Europe's regulatory folly than the European High Performance Computing Joint Undertaking (EuroHPC), established to deploy the continent’s supercomputing infrastructure.

Although the technology is impressive, accessing it requires navigating an administrative labyrinth. Researchers must provide extensive technical documentation, undergo multiple evaluation stages, and wait up to six months for approval (translate that to 3.5 years in ‘normal’ sectors). The process includes admin checks, technical assessments, peer review, committee evaluations, and final approval by the governing board – all before any actual computation begins.

Once approved, users face substantial reporting requirements documenting everything from energy consumption to resource utilization compared to plan. While China and the US race ahead with AI development, Europe's researchers are crushed by the weight of paperwork.

For time-sensitive AI research, such delays are tantamount to killing the golden goose before it’s hatched. During the waiting period, technologies evolve, research questions become outdated, and competitors (especially in other jurisdictions) accelerate. This administrative overhead diverts valuable researcher time away from actual innovation, undermining Europe's competitive position before the first training runs even begin.

A Common Sense Approach for Europe

Europe too often overthinks. If it wants to be the preferred destination for leading AI researchers and developers, it should simply allow top talent to work and focus on maintaining the high quality of life that attracts them in the first place. As the chronically online tech folks on Twitter/X say, “let the cracked AI engineers cook.”

Europe’s admirable regulatory intentions require urgent recalibration to ensure global competitiveness:

Streamline Administrative Processes: Significantly reduce bureaucratic burdens and shorten approval timelines to keep up with the pace of AI innovation.

Centralized Authority: Empower an independent supranational body capable of swift and decisive regulatory implementation to prevent conflicting national approaches.

Incentive-Based Regulations: Shift regulatory strategies from restrictive compliance mandates to incentive-based mechanisms, fostering innovation and compliance through rewards rather than penalties.

Market-Driven Resource Management: Transition resource-intensive projects such as EuroHPC to private entities or strategic cloud partnerships, leveraging market-driven efficiency to optimize resource allocation.

Conclusion

Europe has the potential to lead in AI. Not through micromanagement, but by offering an environment where innovation flourishes alongside its genuinely commendable social system. Europe must focus on incentives (enablement) rather than restrictions (control). Europe can establish a regulatory approach that respects its values while ensuring it remains competitive with China and the US.

The EU AI Act, for all its architectural ambition, epitomizes Europe’s idealistic challenge. It attempts the holy grail of balancing an admirable commitment to ethical principles with the practical realities of a competitive technological world order. But if Europe fails to synchronize its regulatory tempo with the cadence of development, it risks becoming an elaborate bureaucratic archive. Carefully cataloging yesterday's innovations while the tools of tomorrow are built in Silicon Valley and Shenzhen, far from the land of the Enlightenment, is fit for a natural history museum, not the modern-day arena.

Sources

Article 5, EU AI Act. See also European Commission, "Guidelines on prohibited artificial intelligence practices established by Regulation (EU) 2024/1689 (AI Act)," issued February 4, 2025.

Articles 6-15, EU AI Act. For expanded analysis, see European Commission, "Impact Assessment Report on the AI Act," SWD(2021) 84 final, 21.4.2021.

Commission Decision (EU) 2024/1459 of 24 January 2024 establishing the European Artificial Intelligence Office, OJ L 42, 24.1.2024, pp. 32-38.

Articles 65-66, EU AI Act. See also European Commission, "Communication on the establishment of the European AI Board," COM(2025) 12 final, 15.1.2025.

Articles 57-59, EU AI Act. For implementation guidelines, see Commission Implementing Regulation (EU) 2025/XXX establishing detailed arrangements for AI regulatory sandboxes.

European Commission, "Third Draft of the General-Purpose AI Code of Practice published, written by independent experts," March 11, 2025.

European Commission, "Monitoring and Evaluation Framework for the AI Act," SWD(2024) 238 final, published alongside the final text of the AI Act, 13.6.2024

"Removing Barriers to American Leadership in Artificial Intelligence," The White House, January 23, 2025

"AI legislation in the US: A 2025 overview," Software Improvement Group, 2025, p. 8-9, 10-11

"AI Watch: Global regulatory tracker - United States," White & Case LLP, March 31, 2025, p. 7-8, 12

“Tracing the Roots of China's AI Regulations”, Carnegie Endowment, February 2024

"China's New AI Regulations," Latham & Watkins, August 16, 2023, Number 3110, p. 4-5.

"Basic Requirements for the Security of Generative Artificial Intelligence Services," referenced in "AI Ethics: Overview (China)," China Law Vision, January 20, 2025.

"AI Watch: Global regulatory tracker - China," White & Case LLP, 31 March 2025.

EuroHPC JU, "Access Policy for the allocation of the Union's share of access time of the EuroHPC Joint Undertaking Supercomputers and AI Factories," Version 2025 04 09, p. 6-7.

EuroHPC JU, "Extreme Scale Access - Project Scope and Plan," 2025.

EuroHPC JU, "Extreme Scale Access - Full Call Details," 2025, p. 4-5.

EuroHPC JU, "Extreme Scale Access - Terms of Reference," 2025, p. 12.

EuroHPC JU, "Extreme Scale Access - Progress Report," 2025; "Extreme Scale Access - Final Report," 2025.

Hey guys, this sounds a bit too harsh. I mean, on one hand if the application of the AI act lags so much behind, why would a company bother waiting for enforcement before doing anything? To me it speaks more about lack of entrepreneurship than fear of regulations.

On the other hand, I am a bit confused about the bit on EuroHPC. I have been using LUMI, one of the two supercomputers currently available in the EU, since 2023, and I did the paperwork for a project in a couple of hours, receiving 200k GPU hours credits within 2 weeks (and keep in mind that I applied on my own, as a PhD with barely a publication at the time). Since then I think in my group we got 5/6 projects on LUMI, and we hadn't any reporting to do, just drop a line of acknowledgement in any publications using the compute from EuroHPC.

That’s a lot of referees, guys. Are there any players in this particular game?