The State of AI Report aims to provide a comprehensive overview of everything you need to know across AI research, industry, politics, and safety. To keep the report to a manageable length, lots of material doesn’t make it into the final version. We’re bringing some of the research that didn’t make the original cut to Air Street Press, along with our own reflections.

Introduction

Since Donald Trump secured his return to the White House a fortnight ago, we’ve been bombarded with questions about what this will mean for AI regulation. In the minds of certain commentators, when it comes to AI, there’s an almost cartoonish binary between a ‘responsible’ outgoing administration and an ‘anti-safety’ incoming one. We thought it might help to recap a few US political highlights from this year’s State of AI Report to place our views in context.

The twilight of AI safety?

As we alluded to in this year’s report, the partisan divides aren’t as stark as you’d think.

Underneath a lot of the rhetoric about fighting back against ‘woke AI’, we think Republican deregulation isn’t likely to be hugely radical for a few reasons.

For a start, it’s quite hard to take a radical deregulatory approach at a federal level in the US, as there’s … not all that much to dismantle. The requirements in the White House Executive Order largely focus on notification and primarily catch a small handful of labs.

The areas of Biden Administration policymaking that Republicans seem most focused on are probably the ones that will make the least difference to AI labs. For example, Republican Senator Ted Cruz’s “no woke AI” amendment would ban government promotion of ‘equitable’ AI development but would probably have next to no impact on industry.

Meanwhile, there’s little evidence that industry is pushing for the US AI Safety Institute to be dismantled. Sam Altman voluntarily announced that the US AISI would receive an early preview of major AI releases, while Anthropic and Google DeepMind have happily worked with the institute’s UK counterpart.

This is partly out of a sincere concern for safety. But it also makes commercial sense.

If you believe that you’re at the cutting edge of the most transformative technology the world has seen in decades, you will be aware of the acute political sensitivities. It makes a lot of sense to dip governments’ hands in the blood. If your country’s AI Safety Institute tested a model before deployment and was satisfied with it, it’s harder to justify bringing the full force of the regulatory apparatus down on you when a bad actor later misuses your work.

It’s part of the reason that AI companies have been on a mission to make nice with the national security establishment. Whether it’s Meta changing Llama’s terms of service to allow defense use, Anthropic teaming up with Palantir, or OpenAI appointing a former NSA director to its board, these companies want to be closer to the government.

It’s also worth remembering that while Elon Musk, a potential senior Trump advisor, is tough on ‘woke’, he has been a consistent AI doomer. He was an early financial backer of DeepMind and OpenAI because he worried about the technology’s potential to end the world, and he supported SB 1047 in California. He’s unlikely to want zero oversight. Meanwhile, over the past few years Republicans have emerged as some of the most aggressive advocates of big tech regulation, out of concerns around political bias.

Going local

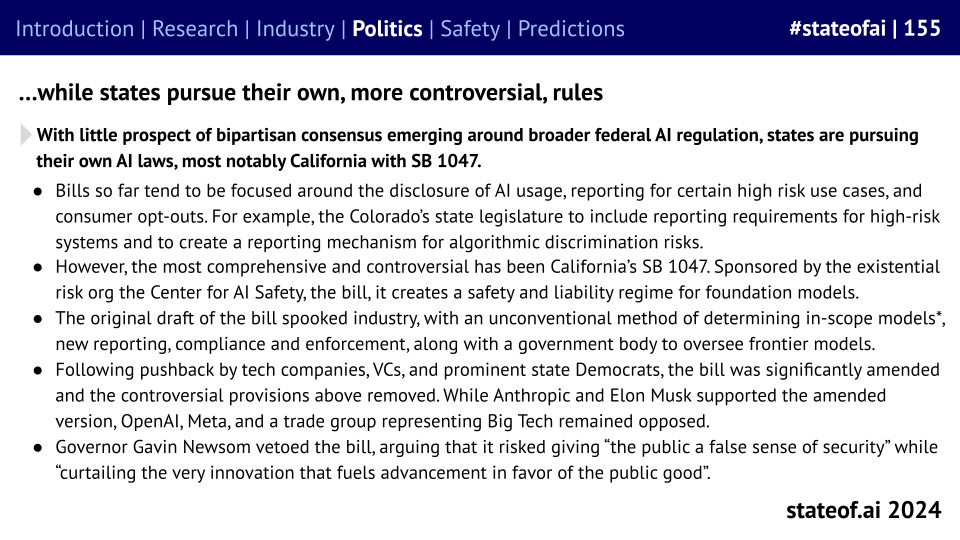

Nevertheless, significant regulation at the federal level is unlikely under the new administration … but that was already the case. The bipartisan Senate AI policy roadmap contains only narrow, loosely worded ideas around regulation (e.g. “considering” a potential ban on social scoring or “considering” measures around transparency in AI healthcare provision).

Where we may see more change (and this is speculation at this stage) is at the state level.

AI-related laws are on the statute book or being debated in almost every state right now, as tracked by Multistate. These range from relatively narrow laws prohibiting non-consensual sexual deepfake images and videos (e.g. in Idaho) through to more sweeping legislation around algorithmic bias and transparency. Colorado is a good example of the latter, and the governor only signed the proposals into law after publicly expressing his reservations.

It would not surprise us if perceived federal deregulation led to more ambitious regulation by Democratic states. The rightness or wrongness of individual state regulations aside, business is rarely happy when it has to navigate vastly different regulatory regimes in different parts of the same country.

Beyond regulation

Domestic AI regulation aside, there are a number of other areas where we are likely to see some change.

While the CHIPS Act (the massive US attempt to onshore part of the semiconductor supply chain) is beginning to gain some traction, it has always had its fair share of critics. Considering Trump’s protectionist instincts, we imagine it’s the social provisions in the legislation that are most likely to come under attack (e.g. labor union consultations, environmental impact measures, etc.) rather than the principle of the Act itself.

Potentially more interesting is the question of European decoupling.

In the report, we looked at how US companies were beginning to collide with European norms, causing product launches to be either canceled or delayed while local adaptations were made.

Considering the new administration’s more confrontational attitude on trade, will there be a penalty attached if the UK or EU decides to regulate big US companies in a heavy-handed way? If X is fined for breaching the Digital Services Act over its content moderation practices, there’s speculation that this could result in a diplomatic spat. This is unknowable at this stage. But even if such a penalty were likely, how much credibility would a political bloc be able to maintain in the eyes of the world if it began pre-emptively softening its approach to legislation or enforcement?

Closing thoughts

We’ve tried to avoid the kind of overly confident punditry that characterizes post-election speculation. But we believe that, given AI’s importance, it’s striking just how unpoliticized many crucial questions around the technology remain. Considering the personalities involved in the next administration, it may not stay that way for very long.

Nathan and Alex
