A decade is a familiar yardstick for measuring progress. In that time, we tend to expect steady, incremental change. When it comes to AI, however, the last ten years have been a story of "gradually, then suddenly". The weekly model launches we are now accustomed to feel incremental in the heat of the moment, but a ten-year look back reveals a landscape transformed by something that feels closer to magic.

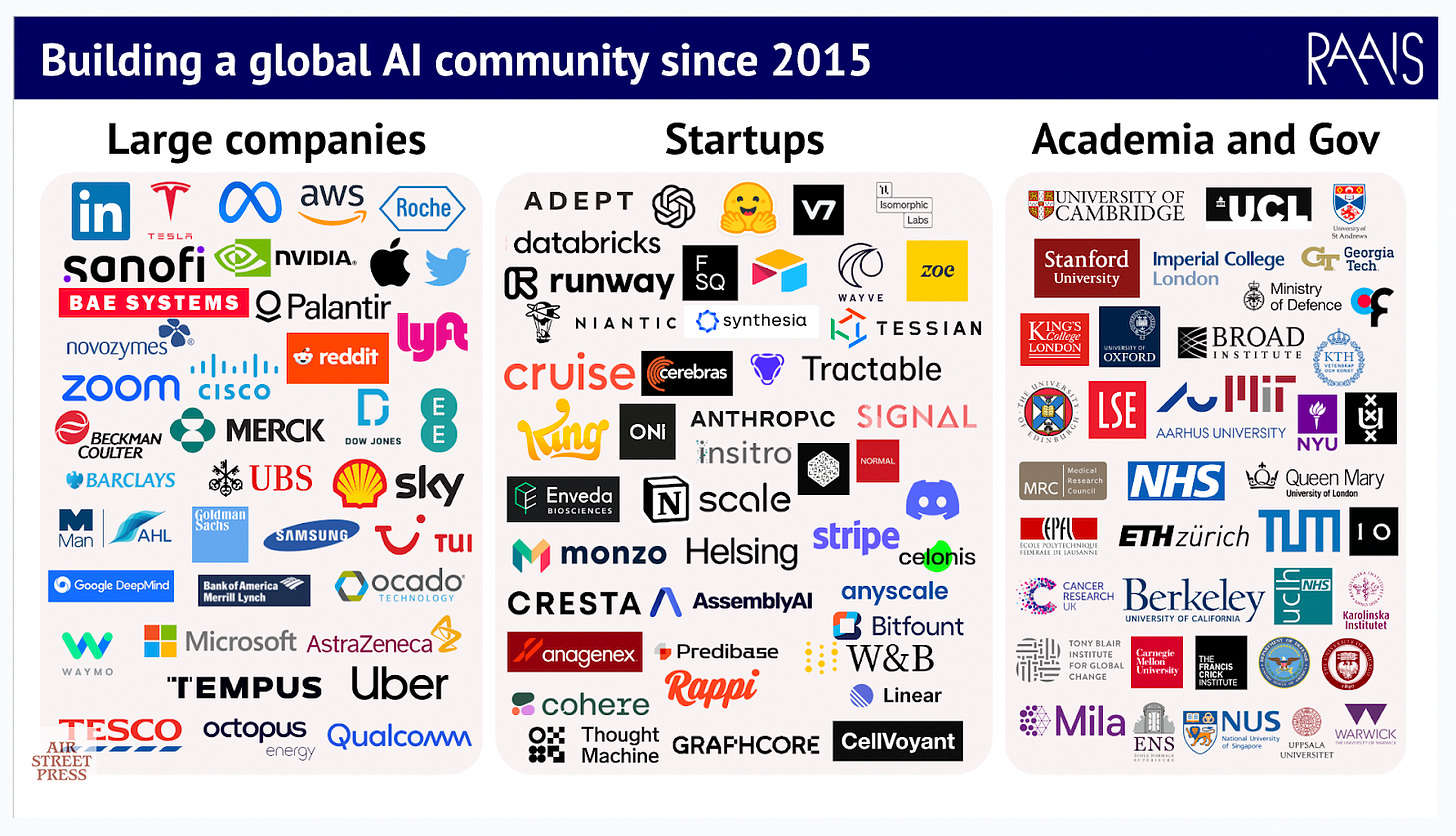

At our 9th Research and Applied AI Summit (RAAIS) on 13 June 2025, we brought together the people building the next decade: researchers, founders, and policymakers who have gone from writing foundational papers to deploying infrastructure and policies at global scale. And if there’s one thing they agree on, it’s this: ten years in, we are still at the beginning.

Here is my opening talk, followed by the same narrative in essay form:

Scaling laws as the engine of progress

The story of modern AI is the story of scale and architecture breakthroughs. In 2015, Baidu’s DeepSpeech 2 showed that deep learning could crack speech recognition using distributed training across dozens of GPUs. That work, featuring a now-legendary list of authors who've gone on to found Anthropic and lead AI at NVIDIA, quietly forecast what was to come.

At RAAIS 2019, Ashish Vaswani (co-author of the original Transformer paper) delivered a prescient talk on this very theme, highlighting how scaling up attention-based models would unlock qualitatively new capabilities beyond natural language. His message was clear even then: scale wasn't just a tool, it was the roadmap. The more data, compute, and model parameters you throw at a problem, the better a model can solve it. Predictably.

By 2020, OpenAI’s scaling laws had turned that intuition into a science, and what was once experimental is now engineering doctrine. From 32 GPUs (once a serious research cluster) to clusters the size of power stations, AI’s scaling curve is now underwritten by some of the largest capital deployments in history. Scaling laws aren’t just a technical insight; they’ve become a national investment thesis.
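For readers who want the "predictably" made concrete, here is a rough sketch of the shape those scaling laws take. The notation is illustrative and not taken from this talk: when data and compute are not the bottleneck, test loss falls approximately as a power law in model size,

$$
L(N) \approx \left(\frac{N_c}{N}\right)^{\alpha_N}
$$

where $N$ is the number of model parameters and $N_c$ and $\alpha_N$ are constants fitted to experiments. Analogous relationships are fitted for dataset size and training compute. On a log-log plot this is a straight line, which is what lets teams extrapolate from small training runs to much larger ones.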

And it’s no longer just about natural language, audio and video. These dynamics now power breakthroughs in other domains like protein design (Profluent) and self-driving (watch my fireside with Wayve CEO, Alex Kendall, from 2024).

Vibe Shifts: AI as strategy, not just software

As the utility of AI scaled, so did the stakes. And then came unmistakable vibe shifts.

AI in defense? Once so taboo that the backlash to Project Maven at Google nearly derailed its deployment. Today, AI is central to Western defense strategy. The EU’s €800B rearmament plan explicitly links military readiness to technological sovereignty.

Chinese AI? Once dismissed as derivative and second-rate. Then DeepSeek dropped and Western markets panicked over a misunderstood paper. From Llama copycat to state-of-the-art, the narrative flipped overnight. The Chinese AI community is now most definitely considered to be "cooking".

And existential risk? Once the dominant frame, with CEOs of major AI labs testifying in Washington about extinction-level threats in 2023. But today's billboard war in SF and Paris tells a new story: "Plz use my app." The long-term fear hasn’t disappeared, but commercial urgency is now firmly in the driving seat.

From grainy images to agents and generative worlds

This edition of RAAIS showed that AI progress isn’t just measurable: it’s cinematic, embodied, and increasingly interactive.

In 2014, image generation struggled to depict a legible [insert your favorite animal].

Today, Synthesia avatars serve 60,000 business customers (watch Synthesia’s CTO at RAAIS 2024). Google’s Veo 3 generates short, controllable cinematic video with high-fidelity audio. Over the last two years alone, ElevenLabs users generated over 1,000 years of audio content.

Robotics? Once abandoned by OpenAI, the field is now proclaimed by Jensen Huang as the next scaling frontier. And the results are showing: Wayve’s self-driving car system recently drove through 90 cities in 90 days using a single model.

The very business of creating software is changing too. Five years ago, GPT-3 could complete prompts, translate languages, and write rudimentary essays, but it struggled with logic, reasoning, and consistency. It was a glimpse of potential, not a general-purpose tool. Today, agents scaffold production-ready apps, navigate and reason through global finance data, and orchestrate complex workflows in customer support.

The RAAIS community

The most powerful ingredient in AI progress is its people. For nearly a decade, RAAIS has been building community in a way that prioritises authenticity and science over hype and vibes. We focus on the quality of interactions: conversations that accelerate careers, collaborations that lead to real progress, and shared moments that shape best practices.

RAAIS is a meeting of researchers, founders, and operators who show up for each other, year after year, to pay progress forward. And thanks to the support of our community, the RAAIS Foundation funds open science and education in AI.

Into the next decade

The last ten years unfolded “gradually, then suddenly.” What felt incremental now defines a new era of magical technology. The next ten years won’t just be faster, they’ll be more ambitious, more impactful, and more meaningful. If the first decade taught us anything, it’s this: we’re still at the starting line.

And RAAIS will continue to be where the next generation of ideas, collaborators, and breakthroughs first meet.