Introduction

In September, Elon Musk’s xAI unveiled Colossus, a compute cluster that is set to use 100,000 NVIDIA H100s. The “gigafactory” of compute will be the largest single AI supercomputer in the world, for now.

This cluster is located in Memphis, Tennessee, and has sparked significant local controversy. The deal was negotiated with local officials at record pace and with minimal transparency, and there are concerns about the impact it will have on the local power grid.

The cluster will start by drawing on 50MW of electricity (roughly the amount used to power 50,000 homes) with plans to triple this. Separately, Memphis has previously told its residents to reduce electricity consumption or risk blackouts. For the avoidance of doubt, the energy is likely produced by gas generators, rather than renewable sources.

We don’t have any objection to xAI’s Tennessee cluster. Instead, we think this story illustrates a few crucial themes: AI’s growing energy demands, the challenges of big tech’s sustainability commitments, and the poor state of grid infrastructure. If unresolved, these challenges risk handing a lead to the West’s adversaries or drawing us into a dependence on Gulf states.

We don’t believe the answer is to slow down progress or to embrace de-growther pseudoscience. We outline the challenge, why we believe it’s solvable, and some first steps governments should take as a matter of urgency.

Growing pains

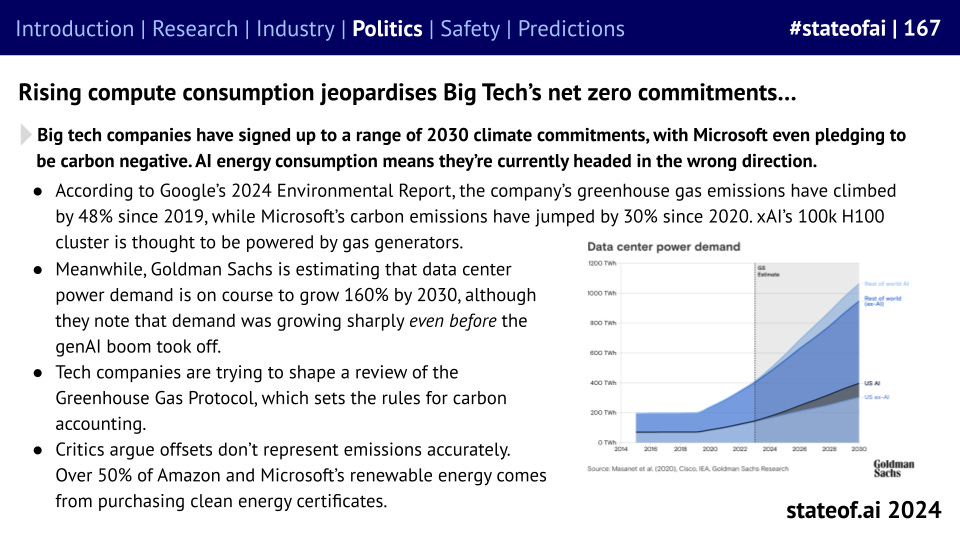

This year’s State of AI Report covered AI’s growing power demands, with data center power demand set to grow at an eye-watering rate over the next few years.

It increasingly looks as though every big tech company is set to miss its 2030 carbon emissions targets - spawning a slightly distasteful effort to rewrite the rules on what constitutes net zero.

We’re now at the stage where tech companies are planning data centers that would consume the output of some of the world’s largest nuclear facilities.

So far, companies have muddled through via a patchwork of renewables and by keeping old coal plants in business. But is there a sustainable long-term solution?

Uranium fever

We’ve recently seen a run of nuclear-related announcements from tech companies. Microsoft announced a power deal with Constellation Energy to restore a unit of the Three Mile Island nuclear plant in Pennsylvania. Restarting the unit will cost Constellation around $1.6B. Meanwhile, Google has struck the world’s first corporate deal to buy the output of small modular reactors (SMRs). Amazon recently bought a nuclear-powered data center from Talen Energy. Some of the world’s largest private equity managers are now reportedly looking at deploying capital into nuclear investments, including Carlyle Group, Brookfield Asset Management, and Apollo Global Management.

We believe that nuclear power will almost certainly be a significant part of the solution, but we’re concerned about the speed at which it’ll deliver on AI’s immediate energy needs.

Let’s take a few of these initiatives in turn. Restarting a dormant nuclear facility like Three Mile Island is really hard, due to both regulatory hurdles and the inevitable degradation that will have occurred during the shut-off period. This means it will not be online until 2028 at the earliest. Based on the plant’s output in its last year of operation, it should provide 835MW of power. That’s great! But, compare it to the numbers above…
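As a rough sense of scale, the sketch below compares the 835MW figure with the Colossus numbers quoted in the introduction. It uses only figures from this piece and makes the simplifying assumption that plant output maps directly onto cluster draw, ignoring transmission losses and downtime. The variable and function names are ours, for illustration only.

```python
# Figures quoted in this piece; everything else is a simplifying assumption.
COLOSSUS_INITIAL_MW = 50        # initial draw of the Memphis cluster
COLOSSUS_EXPANSION_FACTOR = 3   # "plans to triple this"
THREE_MILE_ISLAND_MW = 835      # expected output of the restarted unit


def clusters_supported(plant_mw: float, cluster_mw: float) -> float:
    """How many clusters of a given draw a plant could notionally supply."""
    return plant_mw / cluster_mw


planned_mw = COLOSSUS_INITIAL_MW * COLOSSUS_EXPANSION_FACTOR
ratio_initial = clusters_supported(THREE_MILE_ISLAND_MW, COLOSSUS_INITIAL_MW)
ratio_planned = clusters_supported(THREE_MILE_ISLAND_MW, planned_mw)

print(f"Planned Colossus draw: {planned_mw} MW")
print(f"Clusters at initial draw: {ratio_initial:.1f}")
print(f"Clusters at planned draw: {ratio_planned:.1f}")
```

On these numbers, one restarted unit covers only a handful of clusters at the planned Colossus scale, and not before 2028.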

While the Constellation/Microsoft program will take time, it’s at least based on known, predictable technology. The same thing can’t be said about SMRs.

SMRs have been popular for a while in policy circles. They're seen as a way of accessing cheap, sustainable energy without building large, expensive traditional nuclear facilities that scare both treasury departments and local communities. The idea behind SMRs is that advances in materials, manufacturing, and our ability to model physics and hydraulics mean that it's now possible to provide cheap nuclear at scale via a network of smaller facilities.

Modern computational fluid dynamics allows engineers to model coolant behavior with unprecedented precision, while new materials like accident-tolerant fuels and advanced steel alloys enhance both safety margins and operational efficiency. These reactors often employ passive safety systems that leverage natural circulation and gravity-driven cooling, eliminating the need for complex emergency power systems that traditional plants require.

In the SMR future, individual SMRs will be factory-built and transported to sites, using standardized production methods adapted from advanced manufacturing sectors, like aerospace. The modular design typically involves a compact reactor core housed within a containment vessel, with standardized interfaces for cooling systems and power generation equipment. More units can be added incrementally as needed.

While an SMR may only produce 50-300 MW of electricity (versus 1GW for a traditional nuclear power plant), the ease of building new facilities will make up for it. Many designs feature integral configurations where the steam generators, pressurizer, and primary coolant pumps are all housed within the reactor vessel itself, significantly reducing the complexity of installation and maintenance while improving safety through reduced piping and fewer potential leak points.

This is all great, in theory. There is only one commercially deployed SMR anywhere in the world right now - Akademik Lomonosov, a floating reactor operated by Russia’s state nuclear power company, which generates (a small amount of) power for remote areas in the Arctic. The other country in the early stages of piloting the technology is China.

But elsewhere, progress is sluggish. NuScale, the only SMR provider with a licensed design in the US, had to cancel its milestone project due to lack of commercial interest. This was in part due to economics, with the output forecast to cost meaningfully more than either standard nuclear or renewables.

SMRs’ smallness also presents a disadvantage.

The power output of a reactor decreases over time, causing revenue to fall, but historic construction and current maintenance costs obviously … don’t. This means that per MW of output, SMRs are more expensive. It’s been largely forgotten now, but the US did historically have small nuclear facilities, like the La Crosse Boiling Water Reactor in Wisconsin, Elk River in Minnesota, and Big Rock Point in Michigan. These all closed because the overheads, plus cheaper alternatives, made them economically unviable.

So, all that means we probably can’t confidently bet on SMRs coming to our rescue. And even if they could, it’s unlikely to happen this side of 2030.

Let there be light?

If we want to avoid some of the nuclear-specific challenges, could greater build out of renewables be an answer?

While we’re not doubting their potential contribution to a country’s energy mix, we’re not convinced that they’re a compelling solution to AI-driven energy demand.

Data centers require 24/7 power. While you could theoretically resolve some of the issues around intermittency via batteries, the limitations of battery storage systems mean it’s not quite that simple.

Firstly, to meet the power demands described above, a solar array would have to be substantial, considering panels are often only about 20% efficient. We’d then need a monster battery system. The largest battery storage system anywhere in the world is currently rated at 750MW and most storage systems are smaller. We’d then also need to account for significant degradation over time.

Then throw in a degree of geopolitical risk. Solar energy storage systems typically rely on lithium-ion batteries - overwhelmingly supplied by China.

It’s the infrastructure, stupid

When it comes to AI and energy, we need to consider both generation and transmission. While new nuclear power coming online may help solve generation, the infrastructure that then moves that energy to where it’s needed has seen better days.

As we mentioned earlier, Memphis was already at risk of facing blackouts. Meanwhile, Amazon saw its proposal to increase the power supply from Pennsylvania's Susquehanna nuclear plant blocked by the US Federal Energy Regulatory Commission over concerns that it threatened grid reliability.

But the US electricity grid isn’t a perfectly unified system that spans every region, with long-distance, high capacity lines. It was originally built out decades ago, by thousands of local companies largely acting in isolation from each other. While the industry has now consolidated into a handful of regional grid operators, it still has its eccentricities. For example, Texas’ power grid is isolated from the rest of the US.

Much of this infrastructure is also beginning to show its age. Grid reliability has been in decline since the mid-2010s, as infrastructure built in the 1960s and 1970s nears the end of its 50-80 year life cycles.

This is already beginning to have an impact on providers of AI-related infrastructure.

In some parts of the US, there are now multi-year queues before new data centers are connected to the grid. For example, in Loudoun County, Virginia - known as “data center alley” - waiting times for projects can range from 2 to 4 years. In late 2022, before the full extent of the AI energy surge had started to hit, Dominion Energy filed a letter with regulators warning that there were 18 occasions in spring of that year alone on which customers had been at risk of rolling outages.

One way to resolve this is to expand and modernize power lines, which is usually paid for by upping everyone’s bills. A $5.2B plan to do this in Virginia was received … about as well as you’d expect.

If you think that’s bad

Things may not be great in the US, but as a net energy exporter, it can always keep the lights on. This may not always be very green, but it’s manageable in the short-term. We apologize to any environmental activists who might be reading this.

Over in the Old World, things are much worse. Over 60% of European energy needs are met by imports. Before 2022, Europe largely relied on Russia. This has now been replaced by a combination of Norway, the US, Saudi Arabia, and Qatar. This high dependency puts Europe at the mercy of changing (geo)political winds and exposes the continent to global price fluctuations.

Germany’s last three nuclear plants were closed down in April 2023. France is Europe’s nuclear powerhouse, but its facilities are beginning to show their age. At one point in 2022, half its reactors were shuttered for repairs and maintenance, while unplanned outages recently hit a 4-year high. At least the country has meaningful plans to increase capacity. The UK, meanwhile, is on course to import a record amount of electricity in 2024, surpassing its 2021 record by 50%. By 2028, the UK will be reduced to one functioning nuclear facility.

Along with weak generation, Europe also suffers from aging infrastructure. Ireland has followed the Netherlands in freezing data center construction over concerns about pressure on the grid.

New tech to the rescue?

There are a few tools and tricks that could potentially improve energy efficiency, but it’s unclear if they’ll be able to do this at anything approaching the necessary scale.

We occasionally hear talk of distributed training, along with rumors that Google partially employed it for Gemini Ultra’s pre-training. As the name suggests, distributed training means splitting up the task of model training across multiple computers or devices, rather than using a single machine. This is most commonly achieved via data parallelism, where each machine has a complete copy of the model. Training is then split between machines, with each machine processing different batches simultaneously, and the results periodically synchronized.
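To make the data-parallel idea concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how any production system works: the "model" is a single weight `w` fitted to hypothetical data generated from y = 3x, the four "machines" are simulated in one process, and the synchronization step is a simple average of gradients. All names are ours.

```python
import random


def gradient(w, batch):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, batches, lr):
    """One synchronous step: every worker holds the same copy of w, computes a
    gradient on its own batch, and the gradients are averaged before updating."""
    local_grads = [gradient(w, batch) for batch in batches]  # parallel in practice
    avg_grad = sum(local_grads) / len(local_grads)           # the synchronization
    return w - lr * avg_grad


random.seed(0)
# Hypothetical training data drawn from y = 3x.
data = [(x, 3.0 * x) for x in (random.uniform(-1, 1) for _ in range(64))]
num_workers = 4
shard_size = len(data) // num_workers

w = 0.0
for step in range(200):
    random.shuffle(data)
    # Each simulated worker receives a different batch of the data.
    batches = [data[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    w = data_parallel_step(w, batches, lr=0.5)

print(f"learned weight: {w:.4f}")
```

Note that the workers have to exchange gradients on every single step. In a real cluster, that exchange is where the communication cost of distributed training comes from.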

Smaller machines typically operate more efficiently at full load than large machines at partial load, while spreading heat generation across multiple locations reduces cooling requirements. The other advantage is that it allows for more flexible resource allocation. It becomes possible to power down unused nodes, schedule training during off-peak hours, and game renewable energy intermittency by distributing across different timezones and locations. And crucially, for the above, it might reduce the strain on local grids and abate some of the need for tech companies to move into the nuclear power game.

We’ve seen a few approaches to the task. In this year’s State of AI Report, we covered DiLoCo, an optimization algorithm designed to optimize the process:

While this work shows promise, it’s important to note that, to our knowledge, its effectiveness has not been demonstrated on models bigger than 1B parameters.

This is because distributed training, at the moment, is just very difficult at scale. It brings significant communications overheads and formidable coordination challenges. The impact on resource utilization cuts both ways: distributed training can improve it in some cases and worsen it in others. Getting even utilization across nodes is difficult and can produce a ‘straggler effect’, where the slowest nodes hold the others back.
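The straggler effect is easy to see with a toy calculation. In a synchronous setup, a step only finishes when the slowest node does, so everyone else sits idle in the meantime. The sketch below assumes hypothetical per-node compute times in seconds and ignores communication time entirely. The function names are ours.

```python
def synchronous_step_time(worker_times):
    """A synchronous step ends only when the slowest worker finishes."""
    return max(worker_times)


def utilization(worker_times):
    """Fraction of total node-time spent computing rather than waiting."""
    step = synchronous_step_time(worker_times)
    return sum(worker_times) / (step * len(worker_times))


# Hypothetical per-step compute times, in seconds, for four nodes.
even = [1.0, 1.0, 1.0, 1.0]
with_straggler = [1.0, 1.0, 1.0, 2.0]

print(synchronous_step_time(even), utilization(even))
print(synchronous_step_time(with_straggler), utilization(with_straggler))
```

In this example, a single node running at half speed doubles the step time for everyone and drags utilization down to 62.5%.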

Another proposed technical fix lies in photonics, which involves encoding and transmitting data in light rather than through electric wires. While researchers have succeeded in building silicon waveguides for optical data transmission between chips that drive significant increases in energy efficiency, this work has never been deployed at scale due to challenges around size and temperature sensitivity. We should also be upfront about the potential energy savings here. Data movement and networking account for a relatively small slice of an AI training setup’s energy consumption - very rarely more than about 10%. Even halving this or more will only move the needle so much.
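The arithmetic behind that caveat can be sketched in a few lines. We assume, for illustration, that networking is the full 10% of energy consumption mentioned above and that the rest of the system's consumption is unchanged by the switch to photonics. The function name is ours.

```python
def total_energy_saving(network_share, network_reduction):
    """Overall saving when only the networking slice of consumption shrinks.

    network_share: fraction of total energy used by data movement and networking
    network_reduction: fraction by which photonics cuts that slice
    """
    return network_share * network_reduction


NETWORK_SHARE = 0.10  # upper end of the range cited above

halved = total_energy_saving(NETWORK_SHARE, 0.5)
eliminated = total_energy_saving(NETWORK_SHARE, 1.0)

print(f"Networking energy halved:     {halved:.0%} overall saving")
print(f"Networking energy eliminated: {eliminated:.0%} overall saving")
```

Even in the impossible best case of free networking, the overall saving is capped at the networking slice itself.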

Photonics doubtless will have a role to play. NVIDIA is investing in photonics start-ups, while TSMC has dedicated an R&D team to the task. However, the upfront investment and complexities in prototyping and the supply chain make it hard to see this technology being deployed at scale until well into the 2030s.

Consequences of inaction

While the West dithers on these questions, we can be sure that China isn’t. China is currently one of the world’s largest energy importers and is on a mission to change this.

The country fired up the world’s first fourth-generation nuclear power plant at the end of last year. Fourth-generation reactors’ more advanced cooling systems mean they don’t have to be located near water sources. This puts the country 10-15 years ahead of the US in the deployment of fourth-generation reactor technology. And in August this year, the government approved an additional 11 new reactors. 45% of the nuclear facilities under construction globally are in China. Nearly every nuclear reactor project in China since 2010 has been completed in under 7 years - a rate no western country is close to matching.

As well as our adversaries upping their production, there’s another danger. Dependence. Microsoft is already partnering with G42 to build out data centers in the UAE, while a UAE sovereign wealth fund is partly fuelling BlackRock’s planned AI infrastructure fund. While this may be fine for the short-term, we should remember that the US isn’t the only power courting the Gulf. China is deepening its ties with the UAE and Saudi Arabia, while Chinese surveillance technology is widely used by the UAE’s authorities. Simply put, the US should be cautious about any strategically vital technology becoming dependent on the capital and resources of a region whose leadership are largely fairweather friends.

Ways forward

On the bright side, these issues aren’t insurmountable. For example, the primary barrier to building new transmission lines and connecting generation capacity to the grid in the US is a ten-year permitting delay. There is bi-partisan interest in limiting the window for judicial review of permits, making it easier to mobilize federal land, and stripping back unnecessary bureaucracy from the approvals process for grid projects.

There is likely cause for reviewing burdensome environmental regulation in some cases. Meta recently suffered a setback in its plans for a nuclear-powered AI data center, after it lost a fight with some rare bees. One of the reasons that Amazon wasn’t able to draw extra power for its nuclear data center was that sections of the energy industry were lobbying against the proposal. Some of the burden on the grid could be averted by embracing behind-the-meter generation, where companies procure electricity on site, rather than connecting to the grid. But that requires them to be able to build out generation capacity without each new facility taking in excess of a decade.

Over in the UK, policymakers could do worse than follow some of the recommendations in the viral Foundations essay that was published a few months ago. It documents how a broken planning system, local vetocracy and sprawling regulatory apparatus have reduced the UK to developing-country levels of per capita electricity generation.

In short, democratic nations need to take energy independence seriously, not treat it as a political slogan. Every fight with a local stakeholder averted and every decision delayed risks severe long-term consequences.

If you’re an entrepreneur working in the energy and AI space, get in touch, no matter how early your idea is.