Every month, we produce the Guide to AI, an editorialized roundup covering geopolitics, hardware, start-ups, research, and fundraising. But so much happens in the AI world that weeks can feel like years. So on off-weeks for Guide to AI, we’ll be bringing you three things that grabbed our attention from the past few days…

Subscribe to Air Street Press so you don’t miss any of our writing - like this week’s deep dive into the world of biorisk.

1. Hello, human resources?!

The AI community on X is frequently a fractious place - with debates about politics, methodology, and model evaluation vibes. Yet one thing unites everyone - from “Pause AI” through to e/acc - and that's dunking on Gary Marcus. Are we holding him to a double standard?

When Gary downplays the capabilities of LLMs, everyone rushes to remind him that he said deep learning was hitting a wall in … 2022.

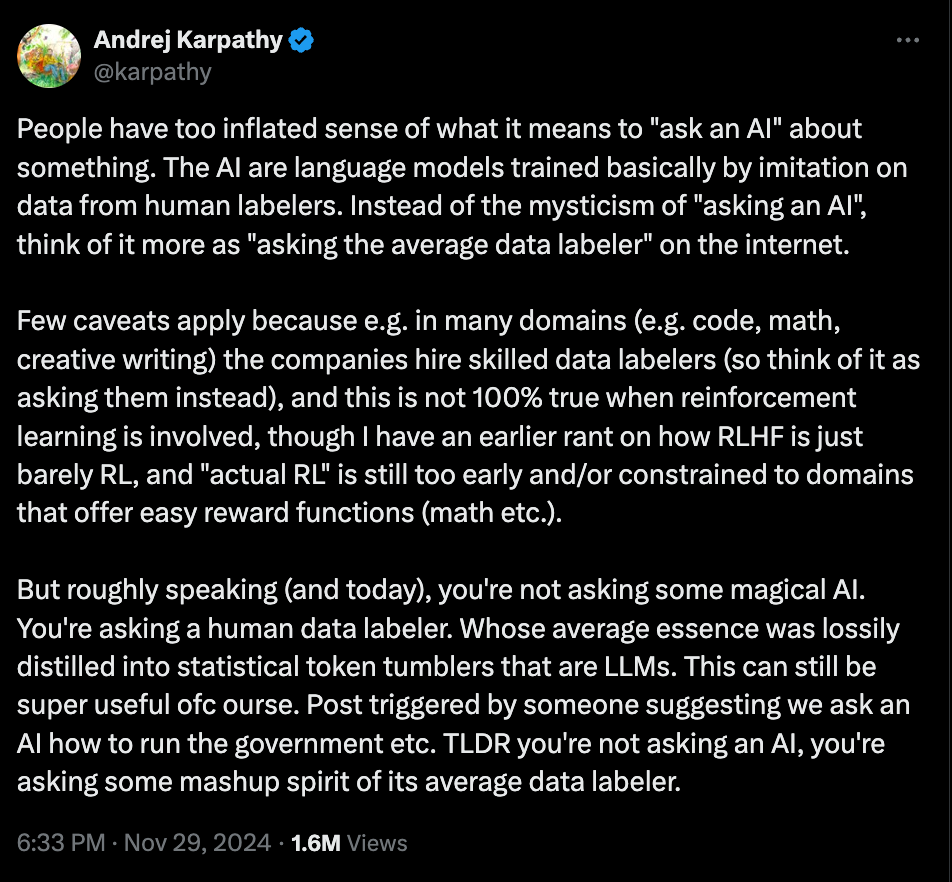

But when our friend Andrej Karpathy tweets the following, we applaud.

Palantir memoirist Nabeel Qureshi summarised the situation memorably:

So are we being unfair?

Not really. For a start, Andrej isn’t going full stochastic parrot. He doesn’t suggest models are mechanically reproducing training data patterns - framing a query as asking the data labellers still acknowledges meaningful synthesis and recombination. And by stressing the importance of domain expertise, he doesn’t treat all training data as equally random or meaningless.

You can suggest LLMs aren’t magic reasoning machines and command community respect if, like Andrej, you acknowledge they “can still be super useful”. Andrej also has a record of just … being right. While people like Gary were mocking transformers, he saw their potential as a general-purpose technology.

Reasoned scientific disagreement is distinct from pouring cold water on other people’s work and claiming it isn’t useful when, to anyone without a severe case of motivated reasoning, it obviously is.

Shots will continue to be fired in the reasoning wars, especially as the community gets its teeth stuck into the full-fat version of OpenAI’s o1. Nathan trialled it on some protein design-related tasks and it demonstrated impressive results (read the biodefense piece…)

We look forward to bringing you fresh news from the front.

2. Vibe shift: Cry havoc and let slip the dogs of war

OpenAI has become the latest frontier lab to embrace defense partnerships, striking a deal with Anduril.

On Wednesday, the two companies announced that they would be marrying OpenAI’s models with Anduril’s Lattice platform to support the synthesis of time-sensitive data in an anti-drone context. To support this work, OpenAI will have access to Anduril’s library of data on counterdrone operations.

This is just the latest step in OpenAI’s defense journey. The company has already appointed a former NSA director to its board, (quietly) struck commercial deals with the US military, and publicly affirmed its support for working on national security.

They’re not the only ones. Meta is changing Llama’s terms of service to allow defense use - we’re sure completely coincidentally - after the Chinese military were revealed to have finetuned a version of Llama 2. Meanwhile, Anthropic has partnered with Palantir to support intelligence and defense operations.

This work is interesting for two reasons.

Firstly, on a technical level. Outside their use in some wargames and for summarization tasks, there currently isn’t a great deal of evidence around the effectiveness of LLMs in a national security context. Work is early, exploratory, and it’s unclear how militaries and defense ministries intend to reform or replace existing processes. Whether these partnerships are renewed or expanded might give us some clues.

Secondly, it’s a reminder of the journey the tech world has been on. Anthropic has gone from messianically evangelizing p(doom) to partnering with the military. Meanwhile, Sam Altman’s public embrace of American values stands in contrast to the position he took last year, when he expressed hope that OpenAI could step out of values debates altogether, setting ‘very broad absolute rules’ and leaving people to finetune.

The successful movements in history welcome converts; they don’t look for traitors. So we welcome our frontier lab brothers and sisters to the mission. LFG.

3. Checkmate, stochastic parrots?

LLMs are surprisingly bad at chess. They struggle to model the state of the board consistently, gaslight you about the rules, and output illegal moves regularly.

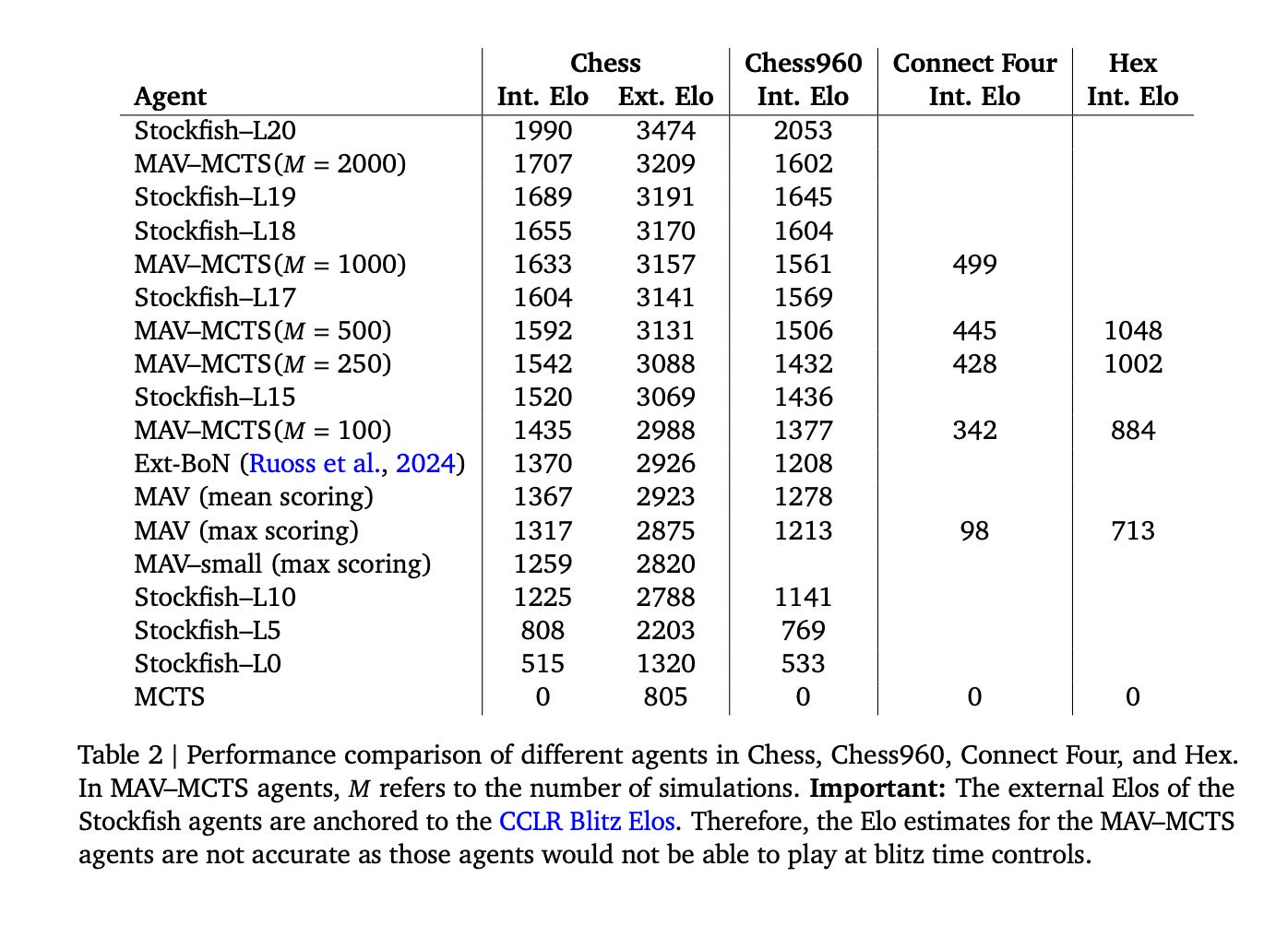

A Google DeepMind team has found that by combining LLMs and search-based planning, it’s possible to drive improvements. They try two different approaches: external search, where the model supports Monte Carlo Tree Search (MCTS), and internal search, where the search process is distilled directly into the LLM.

To be clear, this isn’t AlphaGo mark 2. While both approaches employ MCTS, the similarities stop there. AlphaGo combined a policy network to suggest moves and a value network to evaluate positions. It was then trained via reinforcement and supervised learning on expert game data. By contrast, this model uses a single transformer architecture pre-trained on text-based representations of games, without RL.

Both approaches significantly reduced hallucination, and with external search the model reached grandmaster-level play without having to burn through much compute.

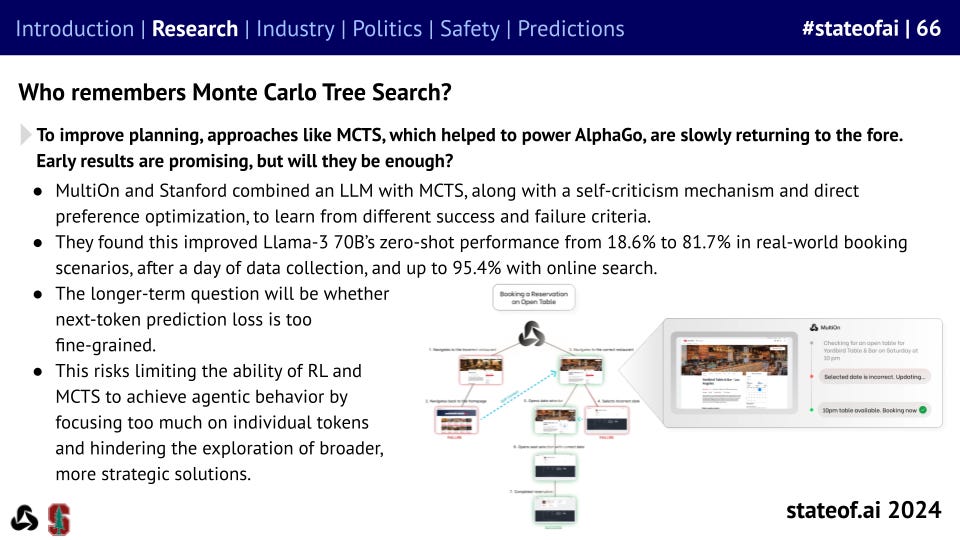
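For the curious, here is a minimal sketch of what "external search" looks like in spirit: a model proposes move priors and a position value, and a tree search does the planning on top. This is not the DeepMind team's implementation. It assumes a toy Nim-style game (take 1-3 stones, whoever takes the last stone wins) in place of chess, a hypothetical `model_stub` standing in for the LLM, AlphaZero-style PUCT selection, and an arbitrary `C_PUCT` constant.

```python
import math

C_PUCT = 1.4  # exploration constant; an arbitrary illustrative choice


class Node:
    def __init__(self, pile, prior):
        self.pile = pile        # stones left for the player to move
        self.prior = prior      # model's prior for the move leading here
        self.visits = 0
        self.value_sum = 0.0    # summed values, from the view of the player to move here
        self.children = {}      # move (stones taken) -> Node


def model_stub(pile):
    """Hypothetical stand-in for the LLM: uniform move priors, neutral value."""
    moves = range(1, min(3, pile) + 1)
    return {m: 1.0 / len(moves) for m in moves}, 0.0


def select(node):
    """Pick the (move, child) pair with the highest PUCT score."""
    def score(item):
        _, child = item
        # Child values are stored from the opponent's view, so negate them.
        q = -child.value_sum / child.visits if child.visits else 0.0
        bonus = C_PUCT * child.prior * math.sqrt(node.visits) / (1 + child.visits)
        return q + bonus
    return max(node.children.items(), key=score)


def simulate(node):
    """Run one search pass; return the value for the player to move at node."""
    if node.pile == 0:
        # The previous player took the last stone, so the player to move has lost.
        value = -1.0
    elif not node.children:
        # Leaf: ask the model for priors and a value, then expand.
        priors, value = model_stub(node.pile)
        for move, prior in priors.items():
            node.children[move] = Node(node.pile - move, prior)
    else:
        _, child = select(node)
        value = -simulate(child)  # flip sign: the child is the opponent's turn
    node.visits += 1
    node.value_sum += value
    return value


def best_move(pile, simulations=2000):
    """Search from the given pile and return the most-visited move."""
    root = Node(pile, prior=1.0)
    for _ in range(simulations):
        simulate(root)
    return max(root.children, key=lambda m: root.children[m].visits)


if __name__ == "__main__":
    print(best_move(5))
```

Even with a stub that knows nothing about the game, the search does the heavy lifting: in this toy game the winning strategy is to leave your opponent a multiple of four stones, and the visit counts should concentrate on moves that do so. Swapping `model_stub` for a model with informative priors and values is what lets the same loop get by with far fewer simulations.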

This ties into a wider trend we’ve seen, where combining LLMs with other approaches (like MCTS) can drive significant improvement on tasks that involve planning - a core component of reasoning. We covered this in the State of AI Report this year:

Beyond the closed world of board games, we examined some of these approaches as part of a longer read on open-endedness, a promising research direction that will be essential for unlocking the most economically useful applications of AI agents.

See you next week!