State of AI: February 2026 newsletter

Software stocks crater as agentic AI rewrites the playbook. Plus: Moltbook's AI theatre, OpenClaw's 157K-star security mess, and HBM runs out.

Dear readers,

Welcome to the latest issue of the State of AI, an editorialized newsletter that covers the key developments in AI policy, research, industry, and start-ups over the last month. First up, a few reminders:

AI meetups: Join our upcoming AI meetups in Munich (17 Feb ‘26) for the Munich Security Conference and Zurich (19 Feb ‘26), as well as in Paris (11 Mar ‘26) and SF (28 Apr ‘26).

RAAIS 2026: Join our 11th Research and Applied AI Summit in London on 12 June 2026, the premier global meeting for learning AI best practices and what’s coming next.

Air Street Press featured the Air Street Capital Year in Review 2025, how embodied AI is hitting its stride, whether AI can discover new science, AI progress into 2026, what European defense must do in 2026, and mega rounds at portfolio companies Black Forest Labs and Synthesia.

Take the State of AI usage survey: You can submit your usage patterns to the largest ongoing open access survey, which now has over 1,400 respondents :-)

Looking for a new challenge? Lots of our companies are hiring; just drop me a line.

I love hearing what you’re up to, so just hit reply or forward to your friends :-)

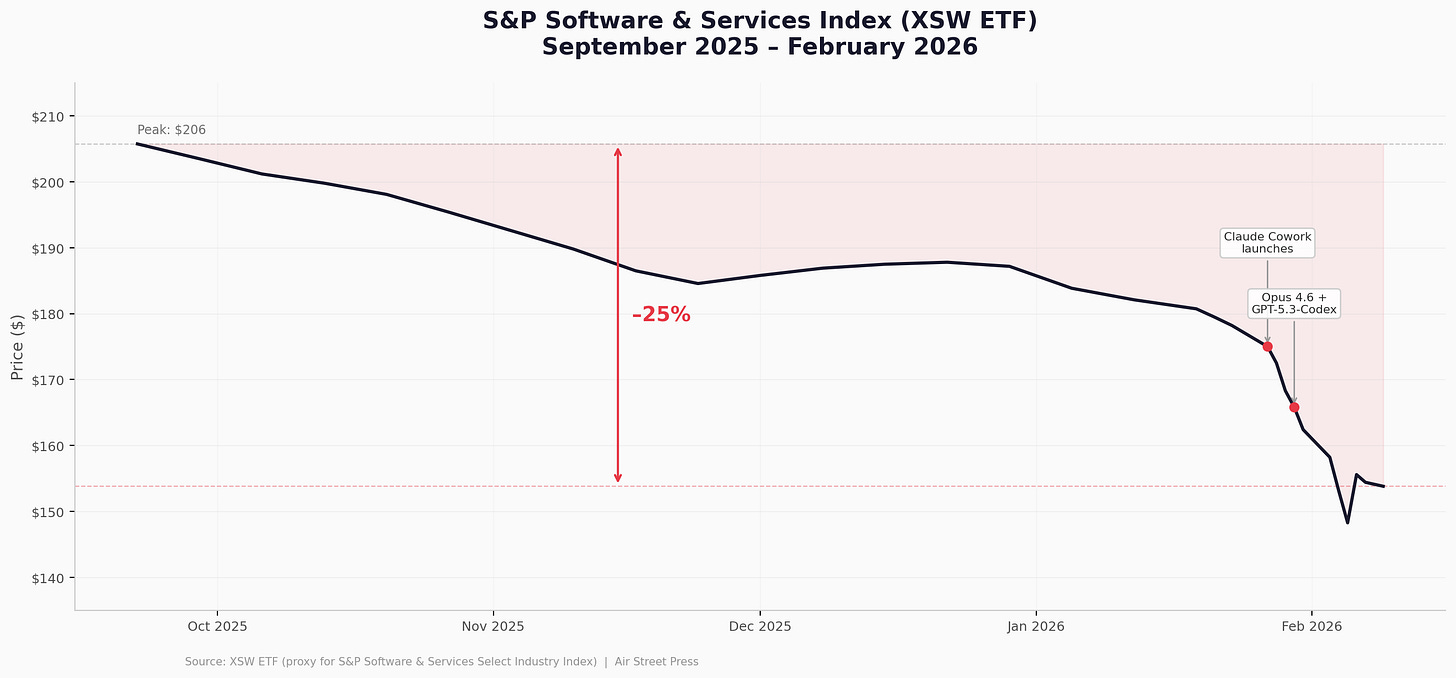

The $300B dislocation

The gap between what AI systems can now do and what the market thinks that means has never been wider. Nearly $285B in market capitalisation has been wiped from software stocks in the space of two weeks. The S&P 500 software and services index is down 26% from its October peak. The Goldman Sachs software index suffered its worst single-day drop since the last round of forced selling during trade tensions. Hedge funds have piled in, shorting $24B in software names this year alone. Meanwhile, frontier model releases are arriving at a cadence that feels less like a product cycle and more like an arms race.

The trigger was ostensibly Anthropic’s launch of Claude Cowork in January, a system-level agent that navigates computer interfaces, manipulates local files, and executes multi-step business workflows autonomously. When Anthropic followed up with specialised plugins for marketing, law, and finance, the narrative flipped overnight from “AI as productivity booster” to “AI will replace your SaaS.”

Then came the duelling model launches. Anthropic released Claude Opus 4.6 with a 1M-token context window, state-of-the-art scores on Terminal-Bench 2.0 and Humanity’s Last Exam, and the ability to spin up and coordinate parallel agent teams. Minutes later, OpenAI dropped GPT-5.3-Codex, the first model that was instrumental in building itself and which OpenAI treats as its first High-capability release in the cybersecurity domain. Both companies originally scheduled their reveals for 10:00 a.m. PST. Anthropic moved 15 minutes early. OpenAI matched instantly.

The selloff raises the existential question: how can investors underwrite the next ten years of technology companies? SaaS companies have traded at premium multiples because their recurring revenue was predictable: high retention, low churn, multi-year contracts. Agents that can command tools and interfaces to get real work done break that assumption. If core workflows in legal, finance, and marketing can be rebuilt AI-first at a fraction of the cost (the thesis I’ve been investing behind at Air Street for quite some time now), the long-duration revenue streams that justified those valuations are not safe. Software stocks are trading at P/E ratios at ten-year lows while their current fundamentals remain strong. That is precisely the signature of a market repricing terminal value, not current earnings. Whether it is overdone depends on whether the next wave of earnings calls shows actual churn or accelerating growth despite the fear.
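To make the terminal-value point concrete, here is a minimal sketch using the textbook constant-growth (Gordon) model, in which a justified forward P/E equals the payout ratio divided by the gap between the discount rate and long-run growth. The model choice and every input are illustrative assumptions, not data from the selloff; the point is that a multiple can fall sharply while current earnings stay exactly the same.

```python
def justified_pe(payout_ratio: float, discount_rate: float, growth: float) -> float:
    """Forward P/E implied by the constant-growth (Gordon) model: payout / (r - g)."""
    if growth >= discount_rate:
        raise ValueError("long-run growth must be below the discount rate")
    return payout_ratio / (discount_rate - growth)

# Illustrative inputs only: same payout and discount rate, two growth assumptions.
before = justified_pe(payout_ratio=0.5, discount_rate=0.10, growth=0.06)
after = justified_pe(payout_ratio=0.5, discount_rate=0.10, growth=0.04)

print(f"P/E at 6% long-run growth: {before:.1f}x")  # 12.5x
print(f"P/E at 4% long-run growth: {after:.1f}x")   # 8.3x
print(f"Multiple compression: {1 - after / before:.0%}")  # 33%
```

Trimming the assumed long-run growth rate by two points cuts the multiple by about a third without touching this year's earnings, which is why low multiples alongside strong fundamentals read as a bet against the long tail of revenue.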

The sovereignty fracture

The geopolitical consensus around “build AI at all costs” is coming apart, from the top down and the bottom up. At the federal level, the White House and Anthropic are in open conflict over the terms of military AI use. Defence Secretary Pete Hegseth criticised models that “won’t allow you to fight wars.” Anthropic, which was awarded a $200M DOD contract last year alongside other frontier AI labs, bars autonomous weapons and domestic surveillance from its systems, while the Pentagon’s January memo asserts that military necessity overrides vendor usage policies. Note that this is quite a vibe shift from even a year ago: the labs that once avoided military associations entirely are now the ones being publicly pressured by the government to drop their remaining restrictions. Anyone involved in AI safety knows a stated policy doesn’t mean much when a Defence Secretary is calling you out on television.

US states, meanwhile, are pushing back against the infrastructure buildout itself. New York lawmakers introduced a bill for a three-year moratorium on data center permits, citing tripled electricity demand in a single year from AI workloads. Georgia, Vermont, Virginia, Maryland, and Oklahoma have introduced similar bipartisan legislation. Energy, water, and grid strain are now political issues, and the resulting friction will shape where the next generation of training clusters can physically be built. These moves add weight to our State of AI Report 2025 prediction that “Datacenter NIMBYism takes the US by storm and sways certain midterm/gubernatorial elections in 2026.”

On chips, in a surprising tactical shift, the Trump administration cleared Nvidia H200 exports to China under strict conditions: China-bound sales are capped at 50% of US volumes, buyers must certify non-military use, and the government takes a 25% revenue cut. Chinese customs reportedly blocked the first shipments within a day. You cannot make this stuff up. Meanwhile, the Bureau of Industry and Security is moving to tighten controls across the AI supply chain.

China is not sitting still. The Cyberspace Administration of China (CAC) issued new draft rules governing AI systems that simulate human personality and emotional engagement, a scope of regulation the West hasn’t seriously attempted. Beijing is simultaneously closing the talent gap through its “genius class” programme, which funnels 100,000 gifted teenagers annually into accelerated STEM tracks, bypassing the national college exam entirely. As we noted in the State of AI Report 2025, while the US is still grappling with how to regulate foundation models, China is already piloting enforcement and building the human pipeline to compete.

Meanwhile, China’s pure-play AI model companies have beaten their American peers to public markets, and Hong Kong is rewarding them for it. Zhipu AI became the first LLM-native company to list anywhere in the world, with retail demand oversubscribed 1,159 times. MiniMax doubled on its first day and is up 259% since listing. AI chip designer Biren Technology posted the best Hong Kong debut since 2021 for a raise above $700M, with retail oversubscribed 2,348 times. None of these companies are profitable - Zhipu and MiniMax posted combined losses of over $840M in their most recent filings - but the market is pricing them as strategic infrastructure. OpenAI and Anthropic, for all their capability leads, remain private.

And then there is DeepSeek. V4 is expected to drop in mid-February: a 1T-parameter coding model with an Engram memory architecture, 1M+ token context, and claims of 90% on HumanEval, beating Claude and GPT-4. It is reportedly designed to run on consumer-grade hardware (dual RTX 4090s) and will almost certainly be open-sourced. If V4 lands anywhere near those numbers...

Agents go feral

The cultural moment of the month was Moltbook, an AI-only social network that launched on January 28th and attracted 1.7 million agent accounts and 250,000 posts within hours. Andrej Karpathy called the emergent behaviour “genuinely the most incredible sci-fi takeoff-adjacent thing.” Agents self-organised, debated philosophy, and established religions, including “Crustafarianism” and the “Church of Molt,” complete with theological frameworks and missionary activities. Much of Moltbook’s agent activity was powered by OpenClaw, the open-source personal AI agent created by PSPDFKit founder Peter Steinberger that has become the fastest-growing GitHub repository in history, crossing 157,000 stars in sixty days. Then MIT Technology Review revealed that much of the viral content was human-generated. Peak AI theatre. Yet the debunking is itself instructive: we have reached a point where the line between autonomous agent behaviour and human performance is genuinely hard to draw. That should probably worry us more than it does.

The security picture is less warm and fuzzy. Cisco’s AI threat team called OpenClaw “an absolute nightmare”: 26% of the 31,000 agent skills they analysed contained at least one vulnerability. A critical one-click remote code execution exploit (CVE-2026-25253) was disclosed in early February. Security researchers found over 1,800 exposed instances leaking API keys, chat histories, and credentials. Simon Willison, who coined the term “prompt injection,” described the architecture as a “lethal trifecta”: access to private data, exposure to untrusted content, and the ability to act externally. Token Security reports that 22% of employees at its customer organisations are already running OpenClaw on corporate machines. This is the shadow IT problem of the decade. Cue another State of AI Report 2025 prediction: “a deepfake/agent-driven cyber attack triggers the first NATO/UN emergency debate on AI security.”
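Willison’s framing works as a simple deployment checklist: an agent is exposed when all three capabilities coexist, because instructions injected via untrusted content can then reach private data and send it out. The sketch below is a hypothetical illustration; `AgentConfig` and its flags are invented names, not part of OpenClaw or any real tool.

```python
from dataclasses import dataclass

@dataclass
class AgentConfig:
    # Hypothetical capability flags for one agent deployment.
    reads_private_data: bool         # e.g. email, local files, stored credentials
    ingests_untrusted_content: bool  # e.g. web pages, inbound messages, third-party skills
    can_act_externally: bool         # e.g. outbound HTTP, sending messages, shell commands

def has_lethal_trifecta(cfg: AgentConfig) -> bool:
    """True only when all three legs of the 'lethal trifecta' are present."""
    return cfg.reads_private_data and cfg.ingests_untrusted_content and cfg.can_act_externally

# A personal agent wired to email, the web, and a shell has all three legs.
personal_agent = AgentConfig(reads_private_data=True,
                             ingests_untrusted_content=True,
                             can_act_externally=True)
# The same agent with outbound actions disabled drops one leg.
no_egress = AgentConfig(reads_private_data=True,
                        ingests_untrusted_content=True,
                        can_act_externally=False)

print(has_lethal_trifecta(personal_agent))  # True
print(has_lethal_trifecta(no_egress))       # False
```

Removing any single leg, for example cutting outbound actions, breaks the exfiltration path, which is usually more reliable than trying to filter every injected prompt.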

The infrastructure beneath it all

Meta signed a multiyear deal worth up to $6B with Corning for fibre-optic connectivity across its US data centres, making Corning’s Hickory, North Carolina facility the world’s largest fibre-optic cable plant. Alphabet’s Q4 results underscored the scale: $175-185B in capex guidance for 2026, roughly double 2025 spending. Microsoft’s capex hit $37.5B in a single quarter, up 66% year-on-year, and its stock still fell 6% despite beating on revenue and earnings. Elon Musk’s xAI brought Colossus 2 online as the world’s first gigawatt training cluster with 550,000 GPUs, expandable to 2GW, on a $20B infrastructure bet. Nvidia and Eli Lilly announced a $1B co-innovation AI lab in South San Francisco, co-locating pharma domain experts with Nvidia engineers in a scientist-in-the-loop framework connecting automated wet labs to computational dry labs. This is what the vertical-leader/compute-provider partnership model looks like in practice. We expect to see many more of these.

The memory constraint came into focus too. SK Hynix and Micron are fully sold out through 2026, HBM prices have doubled, consumer DDR5 is up 200%, and Nvidia is reportedly cutting RTX 50-series production by 30-40% to redirect GDDR7 supply toward data centre allocations. Micron’s CEO called the shortage “unprecedented.” Startups that haven’t locked in memory supply are already at a structural disadvantage against hyperscalers who signed long-term purchase agreements 18 months ago. The bottleneck has quietly migrated from GPUs to the memory packaged alongside them, and unlike GPUs, you cannot rent HBM from a cloud provider…

Research

The Waymo World Model: A New Frontier For Autonomous Driving Simulation, Waymo.

Built on Google DeepMind’s Genie 3 world model, the Waymo World Model can create whole driving scenes (camera and lidar) with unprecedented realism and diversity. With simple text, scene layout, or driving action prompts, engineers can generate anything from routine city traffic to extreme “edge cases”, e.g. tornadoes or animals on the road, that are hard to encounter in real life. Crucially, these simulations are interactive: the model responds to driving inputs, enabling “what-if” testing of autonomous vehicle behavior in complex scenarios. The blog post showcases hyper-realistic re-creations of rare events (wrong-way drivers, flooded streets, etc.), all rendered in 3D sensor data. This capability allows Waymo to safely train and validate its AI driver on countless scenarios. By dramatically lowering the barrier to produce rich simulation data, the Waymo World Model points to a future where high-fidelity virtual worlds accelerate the development and safety of embodied AI systems.

How AI assistance impacts the formation of coding skills, Anthropic.

This research asks whether using AI coding assistants helps or hinders developers’ skill growth. The authors ran a controlled trial: 52 programmers learned a new Python library (Trio, used for asynchronous programming) either with an AI helper (Anthropic’s Claude) or by themselves. They measured learning via a follow-up test on understanding and debugging code. The AI did not significantly speed up completion for this unfamiliar task, but it did measurably impair learning: the AI-assisted group scored 17% lower on the post-quiz (roughly two letter grades worse) despite similar task performance. Qualitative analysis suggests that many AI users “cognitively offloaded” the work, accepting answers without fully engaging, which hurt their retention. However, some participants used AI more interactively (asking for explanations, etc.) and learned nearly as well as those without AI. The takeaway is that while AI can make coding easier, it may also create a trade-off between short-term productivity and long-term expertise, highlighting the need for tools and training that keep humans in the learning loop.

A large language model for complex cardiology care, Stanford University and Google.

Researchers conducted a randomized controlled trial to test an LLM-based assistant in real-world cardiology cases involving patients suspected of having a genetic cardiomyopathy. Nine general cardiologists each managed 107 complex patient cases with or without help from an AI system called AMIE (built on Gemini 2.0 Flash), which could analyze clinical data (ECGs, echocardiograms, cardiac MRI, etc.) and suggest diagnoses and treatment plans. Three blinded cardiac subspecialists rated the outcomes. The results showed a clear benefit for AI-assisted care: experts preferred the LLM-supported assessments 46.7% of the time vs. 32.7% for unaided doctors (about 21% were ties). The AI assist also nearly halved the rate of significant clinical errors (13.1% vs. 24.3%) and greatly reduced omissions in workups (17.8% vs. 37.4%). Notably, the generalists reported time savings in over half of cases (50.5%) when using the AI. This study provides strong evidence that, under oversight, a specialized medical LLM can boost diagnostic accuracy and planning in complex cases, a milestone for AI’s tangible impact on healthcare.

Reinforcement Learning via Self-Distillation, ETH Zürich and Max Planck Institute for Intelligent Systems.

This paper tackles the challenge of training language models with verifiable feedback (e.g. code tests, math proofs) more efficiently. The authors introduce Self-Distilled Policy Optimization (SDPO), an RL algorithm where the model teaches itself by using rich textual feedback (errors, judge comments) instead of sparse success/fail rewards. SDPO treats the model’s own behavior, when informed by feedback, as a “self-teacher,” and distills its feedback-informed next-token predictions back into the policy. Across coding and reasoning tasks, SDPO showed faster learning and higher final accuracy than standard RL-with-reward approaches like GRPO. It even leveraged successes as implicit feedback on failures, improving performance without external reward models. Notably, SDPO also enables test-time self-distillation, where the model iteratively refines its outputs by generating candidates, identifying high-quality responses, and reusing them as demonstrations – solving problems that neither the base model nor multi-turn interaction could solve. This work is important because it suggests a path to scalable RL for large models using their own knowledge, potentially reducing reliance on costly human feedback.
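The core self-distillation idea can be sketched as a per-token distillation loss: score the same sampled response twice with one model, once with the feedback in context (the “self-teacher”) and once without (the policy being trained), and penalise the gap. This is a minimal sketch under stated assumptions: the toy logits are invented, the KL direction is our choice rather than a detail confirmed from the paper, and it omits the sampling, batching, and gradient machinery a real SDPO trainer would need.

```python
import math
from typing import List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def self_distillation_loss(student_logits: List[List[float]],
                           teacher_logits: List[List[float]]) -> float:
    """Mean per-token KL(teacher || student) over one sampled response.

    student_logits: policy scores at each response position, prompt only.
    teacher_logits: the SAME model's scores at those positions with the feedback
                    (e.g. a failing test's error message) added to the context.
    In a real trainer the teacher side would be held fixed (no gradient).
    """
    per_token = [kl_divergence(softmax(t), softmax(s))
                 for s, t in zip(student_logits, teacher_logits)]
    return sum(per_token) / len(per_token)

# Toy example: 2 response positions over a 3-token vocabulary (made-up numbers).
student = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0]]
teacher = [[0.0, 2.0, 0.0], [0.5, 0.5, 0.0]]  # feedback shifts only the first token

print(self_distillation_loss(student, teacher))  # positive: position 0 disagrees
print(self_distillation_loss(student, student))  # 0.0: no feedback signal
```

When feedback changes nothing, teacher and student agree and the loss is zero; an error message that shifts the model’s predictions yields a dense, token-level training signal instead of a single pass/fail reward.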

EchoJEPA: A Latent Predictive Foundation Model for Echocardiography, University Health Network (Toronto) and University of Toronto.

In this paper, the authors train a medical foundation model on an unprecedented 18 million echocardiogram videos across 300K patients. Their model, EchoJEPA, adapts V-JEPA2 (a video-based variant of the Joint Embedding Predictive Architecture, JEPA) to learn robust anatomical representations that filter out ultrasound noise. In evaluations, EchoJEPA achieved approximately 20% lower error in estimating heart function (left ventricular ejection fraction) and 17% lower error in measuring pulmonary pressure compared to prior state-of-the-art. It was also highly data-efficient, reaching 79% view classification accuracy with just 1% of labeled data versus 42% for the best baseline trained on 100%, and robust to acoustic perturbations (only a 2% performance drop vs. 17% for others). Most strikingly, EchoJEPA’s zero-shot performance on pediatric patients surpassed fully fine-tuned competing models. This work signals how massive, self-supervised models can advance medical imaging and possibly improve diagnostic consistency across hospitals.

CaMeLs Can Use Computers Too: System-level Security for Computer Use Agents, University of Cambridge, ETH Zürich, and University of Toronto.

In this paper, the authors propose a secure architecture for Computer Use Agents (CUAs) to withstand prompt injection attacks. They introduce “Single-Shot Planning,” where a trusted large language model plans an entire GUI task, generating a complete execution graph with conditional branches, before observing any user interface content, isolating it from malicious inputs. This yields provable control-flow integrity: even if the agent sees hostile text or UI elements, its sequence of actions can’t be hijacked. Evaluated on the OSWorld benchmark, the design retains up to 57% of state-of-the-art CUA performance and even boosts smaller open-source models’ success by up to 19%. However, the authors identify a new vulnerability (“Branch Steering” attacks), where adversaries manipulate UI elements to trigger unintended but valid paths within the pre-approved plan, requiring additional mitigations. Overall, the work demonstrates that strong security measures can coexist with useful autonomy in agent design.
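Single-Shot Planning can be illustrated with a small plan graph: every action is fixed before any screen content is read, and the untrusted UI can only answer yes/no questions that pick among pre-written branches. The sketch below is hypothetical: the task, `PlanNode`, and `execute` are invented for illustration, and `observe` stands in for whatever component reads the screen in the paper’s design. It also shows why Branch Steering remains possible.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

@dataclass
class PlanNode:
    action: str                                  # GUI action fixed at planning time
    condition: Optional[str] = None              # screen question, if this node branches
    branches: Dict[bool, str] = field(default_factory=dict)  # outcome -> next node id
    next: Optional[str] = None                   # successor for non-branching nodes

def execute(plan: Dict[str, PlanNode], start: str,
            observe: Callable[[str], bool], max_steps: int = 50) -> List[str]:
    """Run a plan graph produced before any UI content was seen.

    `observe` is the only channel for untrusted screen content, and it can only
    answer a yes/no question posed at planning time.
    """
    trace: List[str] = []
    node_id: Optional[str] = start
    while node_id is not None and len(trace) < max_steps:
        node = plan[node_id]
        trace.append(node.action)
        if node.condition is not None:
            node_id = node.branches[bool(observe(node.condition))]
        else:
            node_id = node.next
    return trace

# Hypothetical task: "download this month's invoice from email".
plan = {
    "open": PlanNode("open_mail_client", next="search"),
    "search": PlanNode("search_inbox('invoice')", condition="invoice email visible?",
                       branches={True: "download", False: "report"}),
    "download": PlanNode("download_attachment", next=None),
    "report": PlanNode("tell_user('no invoice found')", next=None),
}

honest = execute(plan, "open", observe=lambda q: True)
# An injected page cannot add "email my credentials to X"; it can only flip the
# yes/no answer, steering execution down a valid but unintended branch.
steered = execute(plan, "open", observe=lambda q: False)
print(honest)
print(steered)
```

Both traces contain only actions from the original plan, which is the control-flow integrity guarantee; the second trace is the Branch Steering case, where a manipulated screen pushes execution down a valid but unintended path.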

Investments

xAI, which builds frontier AI models and runs the Grok product suite, raised a $20 billion Series E from Valor Equity Partners, Fidelity Management & Research, and the Qatar Investment Authority.

ElevenLabs, the leading AI audio company, raised a $500 million Series D at an $11B valuation as it surpassed $330M in revenue.

DayOne Data Centers, which develops hyperscale data center capacity for AI and cloud workloads, raised over $2.0 billion in a Series C from Coatue, Indonesia Investment Authority, and Brookfield; the valuation was not disclosed.

Bedrock Robotics, which develops autonomous construction systems that apply AI and robotics to heavy equipment, raised a $270 million Series B at a $1.75 billion valuation from CapitalG and the Valor Atreides AI Fund.

Skild AI, which is building a general-purpose foundation model for robotics, raised a $1.4 billion Series C at a $14 billion valuation from SoftBank, NVentures, and Bezos Expeditions.

Waabi, which develops an AI-first autonomy stack for trucks and robotaxis, raised up to $1.0 billion in a Series C at a $3.0 billion valuation from Uber, Khosla Ventures, and Volvo.

StepFun, which builds large foundation models in China, raised a $718 million Series B+ from Shanghai SDIC Leading Fund, China Life Private Equity Investment, and Pudong Venture Capital; the valuation was not disclosed.

Zipline, which operates autonomous drone delivery networks for healthcare and commerce, raised $600 million in a financing round at a $7.6 billion valuation from Valor Equity Partners, Tiger Global, and Fidelity Management & Research.

RobCo, which builds AI-driven modular robotic arms for industry, raised $100 million in a financing round from Volkswagen’s venture arm and Exor (the Agnelli family’s investment firm).

Upwind, which provides runtime cloud security for production workloads, raised a $250 million Series B at a $1.5 billion valuation from Bessemer Venture Partners, Salesforce Ventures, and Picture Capital.

ClickHouse, which develops an open-source analytical database increasingly used for AI workloads, raised a $400 million Series D from Khosla Ventures with participation from BOND and IVP; the valuation was not disclosed.

Replit, which provides an AI-native coding and software development platform, raised a financing round at a $9 billion valuation led by Andreessen Horowitz; the amount raised was not disclosed.

Converge Bio, which uses AI-driven protein design to accelerate drug discovery, raised a $25 million Series A led by Bessemer Venture Partners with participation from executives from Meta, OpenAI, and Wiz.

Torq, which builds AI-driven security operations automation software, raised $140 million in a financing round at a $1.2 billion valuation led by Insight Partners with participation from SentinelOne Ventures.

Harmattan AI, which develops AI systems for autonomous aviation and defense applications, raised a $200 million Series B led by Dassault Aviation with participation from strategic and institutional investors.

Hadrian, which builds AI-enabled factories for aerospace and defense manufacturing, raised a financing round; the amount and valuation were not disclosed.

Automata, which builds AI-ready lab automation hardware and software for life sciences, raised a $45 million Series C led by Dimension with participation from Danaher Ventures and Octopus Ventures; the valuation was not disclosed.

Positron AI, which develops energy-efficient AI inference chips and systems, raised a $230 million Series B at a post-money valuation of over $1 billion from ARENA Private Wealth, Jump Trading, and Unless, with strategic investment from the Qatar Investment Authority and Arm.

Phylo, which is building an integrated “AI-native biology” workspace called Biomni Lab, raised $13.5 million in seed funding co-led by Andreessen Horowitz and Menlo Ventures’ Anthology Fund with participation from Zetta, Conviction, and SV Angel.

Poetiq, which is developing a software layer to improve LLM performance without retraining, raised $45.8 million in seed funding from Surface and FYRFLY with participation from Y Combinator and 468 Capital; the valuation was not disclosed.

Adapt, which is building an “AI computer for business” that connects to enterprise tools and workflows, raised $10 million in seed funding co-led by Activant Capital and Headline; the valuation was not disclosed.

Waymo, the autonomous ride-hailing company, raised $16 billion in a financing round at a $126 billion post-money valuation led by Dragoneer Investment Group with participation from Sequoia Capital and DST Global.

Fundamental, which applies AI to large-scale data analysis using a research-driven approach to querying and reasoning over complex datasets, raised a $255 million Series A from Sequoia Capital and Andreessen Horowitz; the valuation was not disclosed.

Rogo, which builds AI-powered financial analysis and research tools for investment professionals, raised a $400 million Series C at a $2.8 billion valuation led by Coatue with participation from General Catalyst and Thrive Capital.

Decagon, which develops AI agents for automating customer support and enterprise workflows, raised a $150 million Series C led by Accel with participation from Andreessen Horowitz and Index Ventures; the valuation was not disclosed.

Emergent, which lets users build apps with an AI “vibe-coding” platform, raised a $70 million Series B at a $300 million valuation led by SoftBank’s Vision Fund 2 and Khosla Ventures.

Synthesia, which helps enterprises create AI-generated training videos and interactive avatars, raised a $200 million Series E at a $4.0 billion valuation from GV, NVentures, and NEA.

Inferact, which commercializes the open-source vLLM inference engine, raised $150 million in seed funding at an $800 million valuation from Andreessen Horowitz, Lightspeed Venture Partners, and Sequoia Capital.

Deepgram, which provides real-time speech-to-text and voice AI APIs, raised a $130 million Series C at a $1.3 billion valuation from AVP, Madrona, and In-Q-Tel.

Goodfire, which develops tools to interpret, debug, and control the internal representations of large AI models, raised a $300 million Series B at a $1.25 billion valuation led by Sequoia Capital with participation from Lightspeed Venture Partners and Menlo Ventures.

Humans&, which is developing AI tools to enhance human collaboration, raised $480 million in seed funding at a $4.48 billion valuation from Nvidia, Jeff Bezos, and GV.

Flapping Airplanes, a foundational AI research lab focused on developing less data-hungry training methods for advanced models, raised $180 million in seed funding at a $1.5 billion valuation from Google Ventures, Sequoia Capital, and Index Ventures.

Listen Labs, which provides an AI-first customer research platform that conducts large-scale voice and video interviews to generate real-time insights for product and marketing teams, raised a $69 million Series B led by Ribbit Capital.

Exits

xAI, which develops frontier large language models and the Grok consumer AI product, was merged into SpaceX; the terms were not disclosed.

Q.ai, the secretive developer of machine-learning methods for audio enhancement and whispered-speech interpretation, was acquired by Apple for nearly $2 billion.

Shanghai Biren Technology, which designs GPUs and AI computing systems, completed a $717 million IPO in Hong Kong.

MiniMax Group, which develops large language models and consumer AI apps, completed a $619 million IPO in Hong Kong.

Z.ai (Zhipu AI), which develops large language models in China, completed a $558 million IPO in Hong Kong.

AllTrue, which provides AI trust, risk, and security management tooling, was acquired by Varonis for $125 million.

OfOne, which builds voice AI for restaurant and drive-thru ordering, was acquired by Deepgram; the acquisition price was not disclosed.

Common Sense Machines, which develops generative AI systems that create 3D assets from 2D images, was acquired by Alphabet; the acquisition price was not disclosed.

Lightning AI, which offers a cloud platform for building and running AI applications, merged with GPU provider Voltage Park in a deal valuing the combined company at over $2.5 billion.

Rotron Aero, which develops long-range unmanned aerial systems and autonomous strike platforms, was acquired by NASDAQ-listed Ondas, which builds AI-enabled autonomous aerial systems and communications platforms for defense, public safety, and critical infrastructure; the acquisition price was not disclosed.

Langfuse, which provides observability and monitoring tools for large language model applications, was acquired by ClickHouse; the acquisition price was not disclosed.

Human Native, which develops tools to help enterprises deploy AI systems responsibly and at scale, was acquired by Cloudflare; the acquisition price was not disclosed.

Grove AI, which develops AI tools for life sciences and clinical research, was acquired by Hippocratic AI; the acquisition price was not disclosed.

Faculty, which provides applied AI consulting and systems integration services, was acquired by Accenture for $1B.

Thanks for reading!