The dawning AI-first era is creating clear winners and losers. We’re seeing giants of the past lose ground, while companies that few initially believed in defy their sceptics. At the same time, the terms of big macro debates in the field seemingly change overnight. In this “vibe shifts” series, we’ll be diving into some of these stories and drawing a few lessons for entrepreneurs and investors.

Introduction

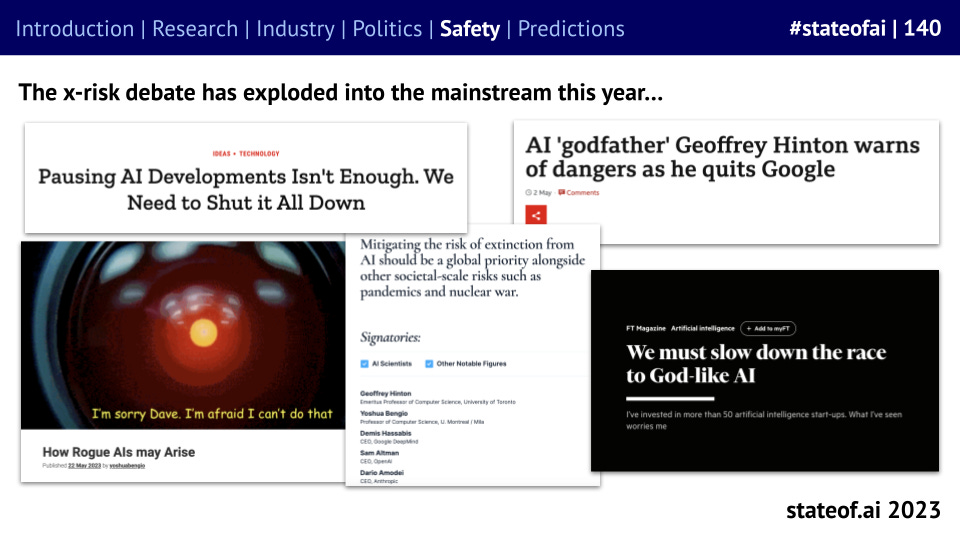

As we compiled this year’s State of AI Report, it was striking to see how one debate in particular had changed: safety.

While we had our longest-ever section on safety-related research, spanning jailbreaking, ‘stealth’ attacks, national resilience efforts, and more, the debate itself felt much cooler. This stands in stark contrast to the previous year, when Yann LeCun was going into battle every day on (then) Twitter against voices who wanted to pause AI development altogether.

In 2023, Anthropic CEO Dario Amodei issued Congress a stark warning on biorisk. In 2024, he was writing a 15,000-word techno-optimist manifesto.

So what’s changed?

When the facts change, the vibes change with them

On one level, Team Safety got a lot of what they wanted. While the most extreme voices remain shut out, governments clearly take these questions much more seriously than they once did.

Despite facing initial scepticism, the UK has managed to build a genuinely world-leading institution focused on AI safety. Anthropic, Google DeepMind, and OpenAI submit their most powerful models to the UK AI Safety Institute or its US counterpart for pre-deployment testing. Various US national security-focused bodies were given early previews of OpenAI's o1.

Provided you don’t want to bomb GPU clusters, you should be reasonably happy with the last year or so of regulatory progress.

Labs, which once underhired safety researchers (if they hired them at all), now publish mountains of safety-related research. And much of this research just … isn’t that scary.

In this year’s report, for example, we covered some eye-catching results. It’s noteworthy, however, that many of them involved highly convoluted attacks that only worked under specific conditions that would be challenging to replicate in the real world.

On another level, AI commentary has fallen victim to Amara’s Law: we overestimate the short-term impact of technology and underestimate its long-term impact. Model capabilities have undoubtedly improved significantly over the past few years, but many of the most breathless predictions about dangerous capabilities simply haven’t come true.

As we’ve covered before, AI biorisk currently remains more hypothetical than real. Meanwhile, agentic systems remain too brittle to be useful for many of the most sophisticated malicious uses that were the subject of speculation. After all, we’re old enough to remember the panic around AutoGPT…

Every time a major election approaches, pundits predict that our information ecosystem will collapse under the weight of deepfakes or AI-generated misinformation. This has yet to happen, and studies suggest that misinformation remains a peripheral, overhyped phenomenon.

This has likely removed some of the abject panic from the debate.

Reality bites

There are, of course, various practical reasons for this change.

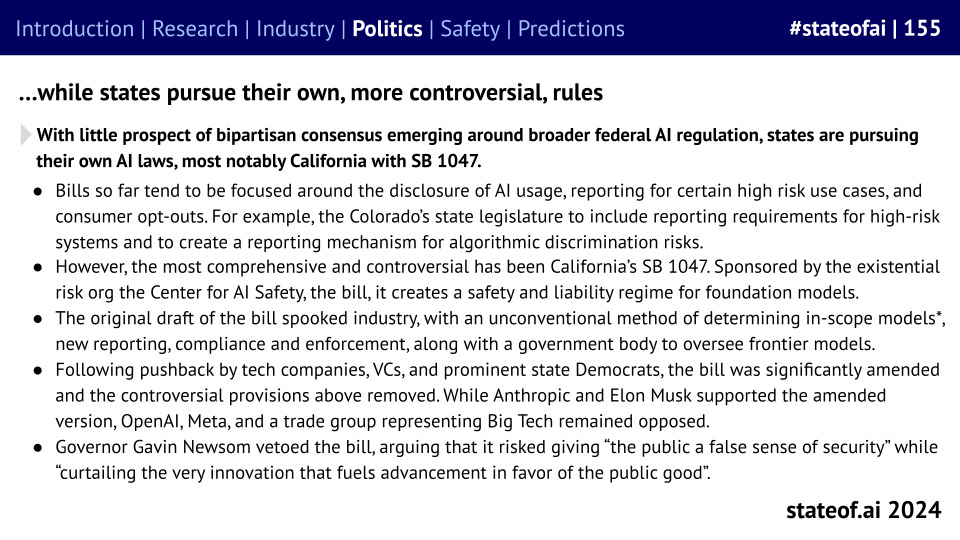

We’ve argued before against the notion that AI safety is some form of regulatory capture ploy or guerrilla marketing tactic. While there’s a theoretical universe in which this could be true, it doesn’t actually explain the actions of any one company particularly well. If it were true, you’d expect to see big tech companies support sweeping regulatory measures like SB 1047 in California, which would undoubtedly have harmed their open source competitors. Instead, they either opposed the bill or lobbied hard to weaken it.

However, it is obviously the case that successful multi-billion-dollar companies are swayed by the political climate to some extent. When a lifelong Democrat like Mark Zuckerberg gives money to Donald Trump’s inaugural fund, it’s not out of an altruistic desire to ensure the new President has a good party.

For a start, these companies do need to make money eventually. Regulation that hurts them, even if it hurts their competitors as well, isn’t particularly helpful when you’re trying to fundraise and it’s unclear if your business model actually works. Seeing intense x-risk impulses translated into regulatory proposals, California-style, likely made companies sit up and rethink.

Secondly, thanks to (geo)political shifts, expectations of companies have changed. Back in 2022/23, most tech companies were keen to emphasize their political neutrality. Sam Altman expressed the hope that OpenAI would never have to adjudicate values conflicts, leaving people to finetune models themselves within certain very broad bounds.

As the return of Donald Trump to the White House became likelier, tech companies rushed to rebrand themselves as champions of American values. Meta, OpenAI, and Anthropic now all allow the military to use their technology, and the latter two are partnering with the likes of Anduril and Palantir.

The incoming administration is unlikely to be overly concerned with safety. The Republican Party’s official platform pledged to repeal the Biden White House’s Executive Order on frontier models, while senior GOP politicians seem interested only in narrowly targeted regulation. Now is not the time to appear opposed to faster American technological progress.

Closing thoughts

The ‘vibe shift’ on safety is a salutary reminder that even apparently powerful memes can collide with reality. However loud a debate gets inside the social media echo chamber, political and economic reality have a tendency to assert themselves.

It’s also important to remember that just because a conversation moves on doesn’t mean nothing has changed. The x-risk wars of 2023 did change things, leaving behind new institutions and new research. Even if the narrative doesn’t determine reality, it often leaves its mark.
